NPTEL Introduction To Machine Learning Assignment Answer

NPTEL Introduction To Machine Learning Week 4 Assignment Answer 2023

Q1. Consider the data set given below.

Claim: PLA (perceptron learning algorithm) can learn a classifier that achieves zero misclassification error on the training data. This claim is:

True

False

Depends on the initial weights

True, only if we normalize the feature vectors before applying PLA.

Answer:-

Q2. Which of the following loss functions are convex? (Multiple options may be correct)

- 0-1 loss (sometimes referred to as mis-classification loss)

- Hinge loss

- Logistic loss

- Squared error loss

Answer:-

Q3. Which of the following are valid kernel functions?

- $(1 + \langle x, x' \rangle)^d$

- $\tanh(K_1 \langle x, x' \rangle + K_2)$

- $\exp(-\gamma \lVert x - x' \rVert^2)$

Answer:-

Q4. Consider the 1 dimensional dataset:

(Note: x is the feature, and y is the output)

State true or false: The dataset becomes linearly separable after using basis expansion with the following basis function $\phi(x) = [1, x^3]$

- True

- False

Answer:-

Q5. State True or False:

SVM cannot classify data that is not linearly separable even if we transform it to a higher-dimensional space.

- True

- False

Answer:-

Q6. State True or False:

The decision boundary obtained using the perceptron algorithm does not depend on the initial values of the weights.

- True

- False

Answer:-
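To make the role of the initial weights concrete, here is a minimal sketch of the perceptron update rule run from two different starting points. The toy data, the function name `train_perceptron`, and the choice to omit a bias term are all assumptions made for illustration; none of them come from the assignment.

```python
def train_perceptron(points, labels, w_init, max_epochs=100):
    """Perceptron without a bias term; stops after an epoch with no mistakes."""
    w = list(w_init)
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x))
            if y * activation <= 0:  # misclassified (or exactly on the boundary)
                w = [wi + y * xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:
            break
    return w

# Hypothetical linearly separable toy data (not taken from the assignment).
points = [(2, 2), (3, 1), (-2, -2), (-1, -3)]
labels = [1, 1, -1, -1]

w_from_zero = train_perceptron(points, labels, (0, 0))    # ends at [2, 2]
w_from_x_axis = train_perceptron(points, labels, (1, 0))  # stays at [1, 0]
```

Both runs end with zero training mistakes on this data, yet the learned weight vectors describe different separating lines (x1 + x2 = 0 versus x1 = 0).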

Q7. Consider a linear SVM trained with n labeled points in $\mathbb{R}^2$ without slack penalties and resulting in k=2 support vectors, where n>100. By removing one labeled training point and retraining the SVM classifier, what is the maximum possible number of support vectors in the resulting solution?

- 1

- 2

- 3

- n − 1

- n

Answer:-

Q8. Consider an SVM with a second order polynomial kernel. Kernel 1 maps each input data point x to $K_1(x) = [x, x^2]$. Kernel 2 maps each input data point x to $K_2(x) = [3x, 3x^2]$. Assume the hyper-parameters are fixed. Which of the following options is true?

- The margin obtained using $K_2(x)$ will be larger than the margin obtained using $K_1(x)$.

- The margin obtained using $K_2(x)$ will be smaller than the margin obtained using $K_1(x)$.

- The margin obtained using $K_2(x)$ will be the same as the margin obtained using $K_1(x)$.

Answer:-

NPTEL Introduction To Machine Learning Week 3 Assignment Answer 2023

1. Which of the following are differences between LDA and Logistic Regression?

- Logistic Regression is typically suited for binary classification, whereas LDA is directly applicable to multi-class problems

- Logistic Regression is robust to outliers whereas LDA is sensitive to outliers

- Both (a) and (b)

- None of these

Answer :- c

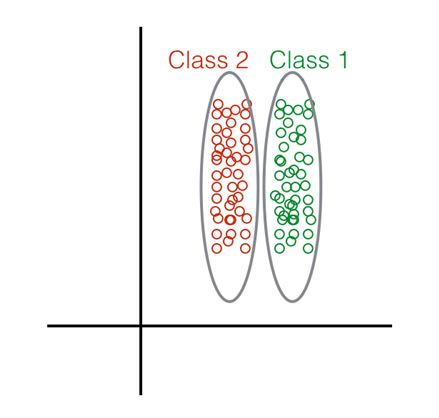

2. We have two classes in our dataset. The two classes have the same mean but different variance.

LDA can classify them perfectly.

LDA can NOT classify them perfectly.

LDA is not applicable in data with these properties

Insufficient information

Answer :- b

3. We have two classes in our dataset. The two classes have the same variance but different mean.

LDA can classify them perfectly.

LDA can NOT classify them perfectly.

LDA is not applicable in data with these properties

Insufficient information

Answer :- d

4. Given the following distribution of data points:

What method would you choose to perform Dimensionality Reduction?

Linear Discriminant Analysis

Principal Component Analysis

Both LDA and PCA.

None of the above.

Answer :- a

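5. If $\log\left(\frac{1 - p(x)}{1 + p(x)}\right) = \beta_0 + \beta x$, what is $p(x)$?

$p(x) = \frac{1 + e^{\beta_0 + \beta x}}{e^{\beta_0 + \beta x}}$

$p(x) = \frac{1 + e^{\beta_0 + \beta x}}{1 - e^{\beta_0 + \beta x}}$

$p(x) = \frac{e^{\beta_0 + \beta x}}{1 + e^{\beta_0 + \beta x}}$

$p(x) = \frac{1 - e^{\beta_0 + \beta x}}{1 + e^{\beta_0 + \beta x}}$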

Answer :- d
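The algebra behind the fourth option can be checked by writing $z = \beta_0 + \beta x$ (a shorthand introduced here, not in the question) and solving for $p(x)$:

```latex
\begin{aligned}
\log\frac{1 - p(x)}{1 + p(x)} &= z, \qquad z = \beta_0 + \beta x \\
1 - p(x) &= e^{z}\,\bigl(1 + p(x)\bigr) \\
1 - e^{z} &= p(x)\,\bigl(1 + e^{z}\bigr) \\
p(x) &= \frac{1 - e^{z}}{1 + e^{z}}
\end{aligned}
```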

6. For the two classes ’+’ and ’-’ shown below.

While performing LDA on it, which line is the most appropriate for projecting data points?

Red

Orange

Blue

Green

Answer :- c

7. Which of these techniques do we use to optimise Logistic Regression:

Least Square Error

Maximum Likelihood

(a) or (b) are equally good

(a) and (b) perform very poorly, so we generally avoid using Logistic Regression

None of these

Answer :- b

8. LDA assumes that the class data is distributed as:

Poisson

Uniform

Gaussian

LDA makes no such assumption.

Answer :- c

9. Suppose we have two variables, X and Y (the dependent variable), and we wish to find their relation. An expert tells us that the relation between the two has the form $Y = me^X + c$. Suppose the samples of the variables X and Y are available to us. Is it possible to apply linear regression to this data to estimate the values of m and c?

No.

Yes.

Insufficient information.

None of the above.

Answer :- b
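One way to see why linear regression still applies: Y is linear in the transformed feature Z = e^X, so ordinary least squares on the pairs (Z, Y) estimates m and c. The sketch below assumes noise-free synthetic samples generated with hypothetical values m = 2 and c = 1; the helper `fit_line` is written here for illustration.

```python
import math

def fit_line(z, y):
    """Ordinary least squares for y = m*z + c with a single feature z."""
    n = len(z)
    z_mean = sum(z) / n
    y_mean = sum(y) / n
    cov = sum((zi - z_mean) * (yi - y_mean) for zi, yi in zip(z, y))
    var = sum((zi - z_mean) ** 2 for zi in z)
    m = cov / var
    c = y_mean - m * z_mean
    return m, c

# Hypothetical noise-free samples from Y = 2 * e^X + 1 (illustrative values only).
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * math.exp(x) + 1.0 for x in xs]

# Transform the feature: Z = e^X makes the relation linear in Z.
zs = [math.exp(x) for x in xs]
m_hat, c_hat = fit_line(zs, ys)
```

With these samples the fit recovers m and c up to floating point error; with noisy data the same transformation applies, but the estimates would only be approximate.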

10. What might happen to our logistic regression model if the number of features is more than the number of samples in our dataset?

It will remain unaffected

It will not find a hyperplane as the decision boundary

It will over fit

None of the above

Answer :- c

NPTEL Introduction To Machine Learning Week 2 Assignment Answer 2023

1. The parameters obtained in linear regression

- can take any value in the real space

- are strictly integers

- always lie in the range [0,1]

- can take only non-zero values

Answer :- a. can take any value in the real space

2. Imagine that we have N independent variables (X1,X2,…Xn) and the dependent variable is Y. Now imagine that you are using linear regression by fitting the best fit line with the least square error on this data. You found that the correlation coefficient for one of the variables (say X1) with Y is -0.005.

- Regressing Y on X1 mostly does not explain away Y.

- Regressing Y on X1 explains away Y.

- The given data is insufficient to decide if regressing Y on X1 explains away Y or not.

Answer :- b. Regressing Y on X1 mostly does not explain away Y.

3. Which of the following is a limitation of subset selection methods in regression?

- They tend to produce biased estimates for the regression coefficients.

- They cannot handle datasets with missing values.

- They are computationally expensive for large datasets.

- They assume a linear relationship between the independent and dependent variables.

- They are not suitable for datasets with categorical predictors.

Answer :- c. They are computationally expensive for large datasets.

4. The relation between study time (in hours) and grade on the final examination (0-100) in a random sample of students in the Introduction to Machine Learning class was found to be: Grade = 30.5 + 15.2 (h)

How will a student’s grade be affected if she studies for four hours?

- It will go down by 30.4 points.

- It will go down by 30.4 points.

- It will go up by 60.8 points.

- The grade will remain unchanged.

- It cannot be determined from the information given

Answer :- c. It will go up by 60.8 points.
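The arithmetic is just the slope times the number of hours: 15.2 × 4 = 60.8. As a quick check, a small sketch (the function name `predicted_grade` is made up for this example):

```python
def predicted_grade(hours):
    """Grade predicted by the fitted line from the question: 30.5 + 15.2 * hours."""
    return 30.5 + 15.2 * hours

# Change in predicted grade between studying 0 hours and 4 hours.
change = predicted_grade(4) - predicted_grade(0)
```

The intercept 30.5 cancels out in the difference, so only the slope determines the change.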

5. Which of the statements is/are True?

- Ridge has sparsity constraint, and it will drive coefficients with low values to 0.

- Lasso has a closed form solution for the optimization problem, but this is not the case for Ridge.

- Ridge regression does not reduce the number of variables since it never leads a coefficient to zero but only minimizes it.

- If there are two or more highly collinear variables, Lasso will select one of them randomly

Answer :- c. Ridge regression does not reduce the number of variables since it never leads a coefficient to zero but only minimizes it.

d. If there are two or more highly collinear variables, Lasso will select one of them randomly

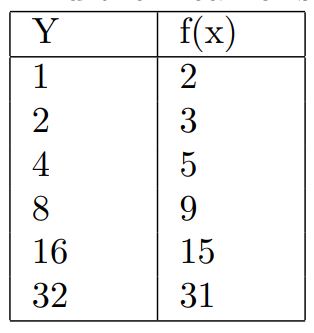

6. Find the mean of squared error for the given predictions:

Hint: Find the squared error for each prediction and take the mean of that.

- 1

- 2

- 1.5

- 0

Answer :- a. 1
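The procedure in the hint can be sketched as follows. The numbers are hypothetical values chosen for illustration, since the question's table is not reproduced in the text; they are not the assignment's data.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sum(squared_errors) / len(squared_errors)

# Hypothetical values for illustration only (not the table from the question).
y_true = [3, 5, 2, 7]
y_pred = [2, 5, 4, 7]
mse = mean_squared_error(y_true, y_pred)  # squared errors: 1, 0, 4, 0
```

For these made-up values the squared errors sum to 5 over 4 predictions, giving a mean of 1.25.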

7. Consider the following statements:

Statement A: In Forward stepwise selection, in each step, that variable is chosen which has the maximum correlation with the residual, then the residual is regressed on that variable, and it is added to the predictor.

Statement B: In Forward stagewise selection, the variables are added one by one to the previously selected variables to produce the best fit till then

- Both the statements are True.

- Statement A is True, and Statement B is False

- Statement A is False and Statement B is True

- Both the statements are False.

Answer :- a. Both the statements are True.

8. The linear regression model $y = a_0 + a_1 x_1 + a_2 x_2 + \dots + a_p x_p$ is to be fitted to a set of N training data points having p attributes each. Let X be the N×(p+1) matrix of input values (augmented by 1’s), Y be the N×1 vector of target values, and θ be the (p+1)×1 vector of parameter values $(a_0, a_1, a_2, \dots, a_p)$. If the sum squared error is minimized for obtaining the optimal regression model, which of the following equations holds?

Answer :- d. $X^T X \theta = X^T Y$
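This equation follows from setting the gradient of the sum squared error to zero. A short derivation, writing the objective as $J(\theta)$ (a symbol introduced here for convenience):

```latex
\begin{aligned}
J(\theta) &= (Y - X\theta)^T (Y - X\theta) \\
\nabla_\theta J(\theta) &= -2\,X^T (Y - X\theta) = 0 \\
X^T X\,\theta &= X^T Y
\end{aligned}
```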

9. Which of the following statements are true regarding Partial Least Squares (PLS) regression?

- PLS is a dimension reduction technique that maximizes the covariance between the predictors and the dependent variable.

- PLS is only applicable when there is no multicollinearity among the independent variables.

- PLS can handle situations where the number of predictors is larger than the number of observations.

- PLS estimates the regression coefficients by minimizing the residual sum of squares.

- PLS is based on the assumption of normally distributed residuals.

- All of the above.

- None of the above.

Answer :- a

10. Which of the following statements about principal components in Principal Component Regression (PCR) is true?

- Principal components are calculated based on the correlation matrix of the original predictors.

- The first principal component explains the largest percentage of the variation in the dependent variable.

- Principal components are linear combinations of the original predictors that are uncorrelated with each other.

- PCR selects the principal components with the highest p-values for inclusion in the regression model.

- PCR always results in a lower model complexity compared to ordinary least squares regression.

Answer :- c. Principal components are linear combinations of the original predictors that are uncorrelated with each other.

| Course Name | Introduction To Machine Learning |

| Category | NPTEL Assignment Answer |


NPTEL Introduction To Machine Learning Week 1 Assignment Answer 2023

1. Which of the following is a supervised learning problem?

- Grouping related documents from an unannotated corpus.

- Predicting credit approval based on historical data.

- Predicting if a new image has a cat or dog based on the historical data of other images of cats and dogs, where you are supplied the information about which image is cat or dog.

- Fingerprint recognition of a particular person used in biometric attendance from the fingerprint data of various other people and that particular person.

Answer :- b, c, d

2. Which of the following are classification problems?

- Predict the runs a cricketer will score in a particular match.

- Predict which team will win a tournament.

- Predict whether it will rain today.

- Predict your mood tomorrow.

Answer :- b, c, d

3. Which of the following is a regression task?

- Predicting the monthly sales of a cloth store in rupees.

- Predicting if a user would like to listen to a newly released song or not based on historical data.

- Predicting the confirmation probability (in fraction) of your train ticket whose current status is waiting list based on historical data.

- Predicting if a patient has diabetes or not based on historical medical record.

- Predicting if a customer is satisfied or unsatisfied with the product purchased from an ecommerce website using the reviews he/she wrote for the purchased product.

Answer :- a, c

4. Which of the following is an unsupervised learning task?

- Group audio files based on the language of the speakers.

- Group applicants to a university based on their nationality.

- Predict a student’s performance in the final exams.

- Predict the trajectory of a meteorite.

Answer :- a, b

5. Which of the following is a categorical attribute?

- Number of rooms in a hostel.

- Gender of a person

- Your weekly expenditure in rupees.

- Ethnicity of a person

- Area (in sq. centimeter) of your laptop screen.

- The color of the curtains in your room.

- Number of legs of an animal.

- Minimum RAM requirement (in GB) of a system to play a game like FIFA, DOTA.

Answer :- b, d, f

6. Which of the following is a reinforcement learning task?

- Learning to drive a cycle

- Learning to predict stock prices

- Learning to play chess

- Learning to predict spam labels for e-mails

Answer :- a, c

7. Let X and Y be uniformly distributed random variables over the intervals [0,4] and [0,6] respectively. If X and Y are independent, then compute the probability P(max(X,Y)>3)

- 1/6

- 5/6

- 2/3

- 1/2

- 2/6

- 5/8

- None of the above

Answer :- f (5/8)
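The answer follows from the complement: max(X,Y) ≤ 3 only when both X ≤ 3 and Y ≤ 3, and independence lets the two probabilities multiply. A small exact-arithmetic sketch (the variable names are chosen for this example):

```python
from fractions import Fraction

# X ~ Uniform[0, 4], Y ~ Uniform[0, 6], independent.
p_x_le_3 = Fraction(3, 4)  # P(X <= 3) = 3/4
p_y_le_3 = Fraction(3, 6)  # P(Y <= 3) = 3/6

# P(max(X, Y) > 3) = 1 - P(X <= 3) * P(Y <= 3)
p_max_gt_3 = 1 - p_x_le_3 * p_y_le_3
print(p_max_gt_3)  # 5/8
```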

8. Find the mean of 0-1 loss for the given predictions:

- 1

- 0

- 1.5

- 0.5

Answer :- d (0.5)
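The mean 0-1 loss is the fraction of predictions that differ from the true label. A minimal sketch, using hypothetical labels because the question's table is not reproduced in the text (these are not the assignment's data):

```python
def mean_zero_one_loss(y_true, y_pred):
    """Fraction of predictions that do not match the true label."""
    mismatches = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return mismatches / len(y_true)

# Hypothetical labels for illustration only (not the table from the question).
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0]
loss = mean_zero_one_loss(y_true, y_pred)  # 2 mismatches out of 5
```

For these made-up labels two of the five predictions are wrong, so the mean 0-1 loss is 0.4.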

9. Which of the following statements are true? Check all that apply.

- A model with more parameters is more prone to overfitting and typically has higher variance.

- If a learning algorithm is suffering from high bias, only adding more training examples may not improve the test error significantly.

- When debugging learning algorithms, it is useful to plot a learning curve to understand if there is a high bias or high variance problem.

- If a neural network has much lower training error than test error, then adding more layers will help bring the test error down because we can fit the test set better.

Answer :- b, d

10. Bias and variance are given by:

- $E[\hat{f}(x)] - f(x),\ E[(E[\hat{f}(x)] - \hat{f}(x))^2]$

- $E[\hat{f}(x)] - f(x),\ E[(E[\hat{f}(x)] - \hat{f}(x))]^2$

- $(E[\hat{f}(x)] - f(x))^2,\ E[(E[\hat{f}(x)] - \hat{f}(x))^2]$

- $(E[\hat{f}(x)] - f(x))^2,\ E[(E[\hat{f}(x)] - \hat{f}(x))]^2$

Answer :- a
