VSeeFace

About

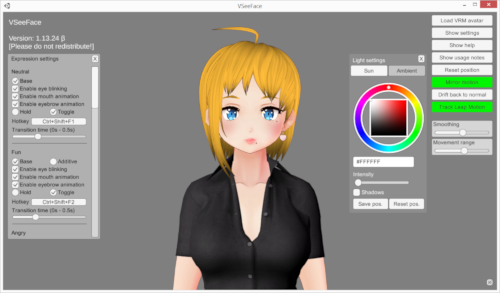

VSeeFace is a free, highly configurable face and hand tracking VRM and VSFAvatar avatar puppeteering program for virtual youtubers with a focus on robust tracking and high image quality. VSeeFace offers functionality similar to Luppet, 3tene, Wakaru and similar programs. VSeeFace runs on Windows 8 and above (64 bit only). Perfect sync is supported through iFacialMocap/FaceMotion3D/VTube Studio/MeowFace. VSeeFace can send, receive and combine tracking data using the VMC protocol, which also allows support for tracking through Virtual Motion Capture, Tracking World, Waidayo and more. Capturing with native transparency is supported through OBS’s game capture, Spout2 and a virtual camera.

Face tracking, including eye gaze, blink, eyebrow and mouth tracking, is done through a regular webcam. For the optional hand tracking, a Leap Motion device is required. You can see a comparison of the face tracking performance compared to other popular vtuber applications here. In this comparison, VSeeFace is still listed under its former name OpenSeeFaceDemo.

Running four face tracking programs (OpenSeeFaceDemo, Luppet, Wakaru, Hitogata) at once with the same camera input. 😊 pic.twitter.com/ioO2pofpMx

— Emiliana (@emiliana_vt) June 23, 2020

If you have any questions or suggestions, please first check the FAQ. If that doesn’t help, feel free to contact me, @Emiliana_vt!

Please note that Live2D models are not supported. For those, please check out VTube Studio or PrprLive.

Download

To update VSeeFace, just delete the old folder or overwrite it when unpacking the new version.

If VSeeFace does not start for you, this may be caused by the NVIDIA driver version 526. For details, please see here.

If you use a Leap Motion, update your Leap Motion software to V5.2 or newer! Just make sure to uninstall any older versions of the Leap Motion software first. If necessary, V4 compatibility can be enabled from VSeeFace’s advanced settings.

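Starting with VSeeFace v1.13.36o, Leap Motion hand tracking requires Leap Motion Gemini V5.2 or newer. Correct operation is not guaranteed unless older versions of Leap Motion Orion are uninstalled before installing V5.2. If necessary, V4 compatibility can be enabled from VSeeFace’s settings.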

Old versions can be found in the release archive here. This website, the #vseeface-updates channel on Deat’s discord and the release archive are the only official download locations for VSeeFace.

I post news about new versions and the development process on Twitter with the #VSeeFace hashtag. Feel free to also use this hashtag for anything VSeeFace related. Starting with 1.13.26, VSeeFace will also check for updates and display a green message in the upper left corner when a new version is available, so please make sure to update if you are still on an older version.

The latest release notes can be found here. Some tutorial videos can be found in this section.

The reason it is currently only released in this way is to make sure that everybody who tries it out has an easy channel to give me feedback.

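VSeeFace is face tracking software for VTubers. It lets you easily animate a VRM avatar with a webcam. Hand and finger tracking through Leap Motion is also available. Perfect sync through iFacialMocap/FaceMotion3D is supported. The VMC protocol is supported as well (Waidayo, iFacialMocap2VMC). Download it here. The release notes are here. It is still a beta version.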

Besides VRM, VSFAvatar, a format based on Unity AssetBundles, can also be used. The SDK is here. VSFAvatar models can use custom shaders, Dynamic Bones, constraints and more.

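After joining @Virtual_Deat’s Discord server, click 👌 in the rules channel to agree to the rules and the other channels will be displayed. There is a #vseeface channel and also a Japanese channel.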

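VSeeFace cannot be recorded using chroma keying, but if you check Allow transparency on OBS’s Game Capture and hide the UI with the ※ button in the lower right corner of VSeeFace, you get a clean transparent background.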

A Japanese translation of the UI is available, and there are Japanese tutorial videos as well. Japanese can be selected on the first screen.

License: Feel free to use it for both commercial and non-commercial purposes.

Terms of use

You can use VSeeFace on stream or do pretty much anything you like, including non-commercial and commercial uses. Just don’t modify it (other than the translation json files) or claim you made it.

VSeeFace is beta software. There may be bugs and new versions may change things around. It is offered without any kind of warranty, so use it at your own risk. It should generally work fine, but it may be a good idea to keep the previous version around when updating.

Extending functionality

While modifying the files of VSeeFace itself is not allowed, injecting DLLs for the purpose of adding or modifying functionality (e.g. using a framework like BepInEx) to VSeeFace is allowed. Analyzing the code of VSeeFace (e.g. with ILSpy) or referring to provided data (e.g. VSF SDK components and comment strings in translation files) to aid in developing such mods is also allowed. Mods are not allowed to modify the display of any credits information or version information.

Please refrain from commercial distribution of mods and keep them freely available if you develop and distribute them. Also, please avoid distributing mods that exhibit strongly unexpected behaviour for users.

Disclaimer

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

Credits

VSeeFace is being created by @Emiliana_vt and @Virtual_Deat.

VSFAvatar

Starting with VSeeFace v1.13.36, a new Unity asset bundle and VRM based avatar format called VSFAvatar is supported by VSeeFace. This format allows various Unity functionality such as custom animations, shaders and various other components like dynamic bones, constraints and even window captures to be added to VRM models. This is done by re-importing the VRM into Unity and adding and changing various things. To learn more about it, you can watch this tutorial by @Virtual_Deat, who worked hard to bring this new feature about!

A README file with various important information is included in the SDK, but you can also read it here. The README file also contains information about compatible versions of Unity and UniVRM as well as supported versions of other components, so make sure to refer to it if you need any of this information.

SDK download: v1.13.38c (release archive)

Information in Japanese is available on @narou_riel’s memo site.

Make sure to set the Unity project to linear color space.

You can watch how the two included sample models were set up here.

Tutorials

There are a lot of tutorial videos out there. This section lists a few to help you get started, but it is by no means comprehensive. Make sure to look around!

Official tutorials

- Tutorial: How to set up expression detection in VSeeFace @ Emiliana

- The New VSFAvatar Format: Custom shaders, animations and more @ Virtual_Deat

VSeeFace tutorials

- Ultimate Guide for VSeeFace @ Kana Fuyuko

- VSeeFace is nice pog @ Killakuma

- Ultimate Guide for VSeeFace Part 2 @ Kana Fuyuko

- How to use VSeeFace @ Raelice

- Precision face tracking from iFacialMocap to VSeeFace @ Suvidriel

- HANA_Tool/iPhone tracking - Tutorial Add 52 Keyshapes to your Vroid @ Argama Witch

- Setting Up Real Time Facial Tracking in VSeeFace @ Fofamit

- iPhone Face ID tracking with Waidayo and VSeeFace @ Fofamit

- Full body motion from ThreeDPoseTracker to VSeeFace @ Suvidriel

- VR Tracking from VMC to VSeeFace @ Suvidriel

- Hand Tracking / Leap Motion Controller VSeeFace Tutorial @ AMIRITE GAMING

- VTuber Twitch Expression & Animation Integration @ Fofamit

- How to pose your model with Unity and the VMC protocol receiver @ NiniNeen

- How To Use Waidayo, iFacialMocap, FaceMotion3D, And VTube Studio For VSeeFace To VTube With @ Kana Fuyuko

VRM model tutorials

- Springbones: How to add physics to bones @ Deat’s virtual escapades

- How to Adjust Vroid blendshapes in Unity! @ Argama Witch

- Advanced emotions for VRoid VRM models @ Suvidriel

VSFAvatar model tutorials

- VSFAvatar tutorial playlist @ Suvidriel

- How I fix Mesh Related Issues on my VRM/VSF Models @ Feline Entity

- Turning Blendshape Clips into Animator Parameters @ Feline Entity

- Proxy Bones (instant model changes, tracking-independent animations, ragdoll) @ Feline Entity

Japanese tutorial videos:

- VTuber向けアプリに黒船襲来!?海外勢に人気のVSeeFaceに乗り遅れるな!【How to use VSeeFace for Japanese VTubers (JPVtubers)】 @ 大福らなチャンネル

- 【Webカメラで動かす】3D VTuber 向け Unity 要らずで簡単!全身+顔+指が動くフルトラッキング環境解説【VSeeFace+TDPT+waidayo】 @ ひのちゃんねる/hinochannel

- Webカメラ2台で顔も身体もトラッキング!解説動画 @ あこゆかプロジェクト

- VSeeFace Spout2で画面をエクスポートって何?メニューをOBSに映さない方法 @ 出口貞夫 / Deguchi Sadao

- VSFAvatar形式で3Dルーム、デスクトップキャプチャ、カメラ切り替え @ 金曜日びすたん

- 【VSeeFaceSDK】VSeeFaceでリアルタイムエフェクト動かしたい @ 金曜日びすたん

- 個人Vtuberでも豪華フルトラ配信ができる?!解説講座動画 (mocopi) @ 出口貞夫 / Deguchi Sadao

Manual

This section is still a work in progress. For help with common issues, please refer to the troubleshooting section.

The most important information can be found by reading through the help screen as well as the usage notes inside the program.

FAQ

How can I move my character?

You can rotate, zoom and move the camera by holding the Alt key and using the different mouse buttons. The exact controls are given on the help screen.

Once you’ve found a camera position you like and would like for it to be the initial camera position, you can set the default camera setting in the General settings to Custom. You can now move the camera into the desired position and press Save next to it, to save a custom camera position. Please note that these custom camera positions do not adapt to avatar size, while the regular default positions do.

How can I change the direction of the lighting?

You can adjust the lighting by holding the Ctrl key and dragging the mouse while left clicking. Pressing D will reset it. The currently set light position can be saved in the Light settings window.

How do I do chroma keying with the gray background?

VSeeFace does not support chroma keying. Instead, capture it in OBS using a game capture and enable the Allow transparency option on it. Once you press the tiny ※ button in the lower right corner, the UI will become hidden and the background will turn transparent in OBS. You can hide and show the ※ button using the space key.

What’s the best way to set up a collab then?

You can set up the virtual camera function, load a background image and do a Discord (or similar) call using the virtual VSeeFace camera.

Can I get rid of the ※ button in the corner somehow? It shows up in OBS.

You can hide and show the ※ button using the space key.

Sometimes blue bars appear at the edge of the screen, what’s up with that and how do I get rid of them?

Those bars are there to let you know that you are close to the edge of your webcam’s field of view and should stop moving that way, so you don’t lose tracking due to being out of sight. If you have set the UI to be hidden using the ※ button in the lower right corner, blue bars will still appear, but they will be invisible in OBS as long as you are using a Game Capture with Allow transparency enabled.

I’m using a model from VRoid Hub and when I press start, a white bar appears in the center of the screen and nothing happens?

For some reason, VSeeFace failed to download your model from VRoid Hub. As a workaround, you can manually download it from the VRoid Hub website and add it as a local avatar.

Does VSeeFace have gaze tracking?

Yes, unless you are using the Toaster quality level or have enabled Synthetic gaze, which makes the eyes follow the head movement, similar to what Luppet does. You can try increasing the gaze strength and sensitivity to make it more visible.

Why can’t VSeeFace show the whole body of my model?

It can, you just have to move the camera. Please refer to the last slide of the Tutorial, which can be accessed from the Help screen, for an overview of camera controls. It is also possible to set a custom default camera position from the general settings.

Why isn’t my custom window resolution saved when exiting VSeeFace?

Resolutions that are smaller than the default resolution of 1280x720 are not saved, because it is possible to shrink the window in such a way that it would be hard to change it back. You might be able to manually enter such a resolution in the settings.ini file.
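
The rule above can be sketched as a small check. This is only an illustration and not VSeeFace’s actual code: the function name is hypothetical, and treating a resolution as “smaller” when either dimension is below the default is an assumption, since the exact comparison is not spelled out here.

```python
# Default window resolution mentioned in the answer above.
DEFAULT_WIDTH, DEFAULT_HEIGHT = 1280, 720


def should_save_resolution(width: int, height: int) -> bool:
    """Return True if a window size would be remembered on exit.

    Assumption: a size counts as "smaller than the default" when either
    dimension is below 1280x720.
    """
    return width >= DEFAULT_WIDTH and height >= DEFAULT_HEIGHT


if __name__ == "__main__":
    for size in [(1920, 1080), (1280, 720), (800, 600)]:
        print(size, "saved" if should_save_resolution(*size) else "not saved")
```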

Can I change avatars/effect settings/props without having the UI show up in OBS with a hotkey?

You can completely avoid having the UI show up in OBS by using the Spout2 functionality. For more information, please refer to this. Effect settings can be controlled with components from the VSeeFace SDK, so if you are using a VSFAvatar model, you can create animations linked to hotkeyed blendshapes to animate and manipulate the effect settings. The local “L” hotkey will open a file opening dialog to directly open model files without going through the avatar picker UI, but loading the model can lead to lag during the loading process. Since loading models is laggy, I do not plan to add general model hotkey loading support. Instead, where possible, I would recommend using VRM material blendshapes or VSFAvatar animations to manipulate how the current model looks without having to load a new one.

Is Spout2 capture supported by Streamlabs?

Streamlabs has implemented Spout2, you can find their documentation here.

What are the requirements for a custom model to make use of the gaze tracking?

If humanoid eye bones are assigned in Unity, VSeeFace will directly use these for gaze tracking. The gaze strength setting in VSeeFace determines how far the eyes will move and can be subtle, so if you are trying to determine whether your eyes are set up correctly, try turning it up all the way. You can also use the Vita model to test this, which is known to have a working eye setup. Also, see here if it does not seem to work.

To use the VRM blendshape presets for gaze tracking, make sure that no eye bones are assigned in Unity’s humanoid rig configuration. Often other bones (ears or hair) get assigned as eye bones by mistake, so that is something to look out for. The synthetic gaze, which moves the eyes either according to head movement or so that they look at the camera, uses the VRMLookAtBoneApplyer or the VRMLookAtBlendShapeApplyer, depending on what exists on the model. Also see the model issues section for more information on things to look out for.

With ARKit tracking, I recommend animating eye movements only through eye bones and using the look blendshapes only to adjust the face around the eyes. Otherwise both bone and blendshape movement may get applied.

What should I do if my model freezes or starts lagging when the VSeeFace window is in the background and a game is running?

In rare cases it can be a tracking issue. If your screen is your main light source and the game is rather dark, there might not be enough light for the camera and the face tracking might freeze.

More often, the issue is caused by Windows allocating all of the GPU or CPU to the game, leaving nothing for VSeeFace. Here are some things you can try to improve the situation:

- If you are using an NVIDIA GPU, make sure you are on the latest driver and the latest version of VSeeFace. Ensure that hardware based GPU scheduling is enabled.

- Make sure you are using VSeeFace v1.13.37c or newer and run it as administrator. If you use a game capture instead of Spout2 to capture VSeeFace, you might have to run OBS as administrator as well for the game capture to work properly.

- Ensure that “Disable increased background priority” in the “General settings” is not ticked, so that the increased background priority function is enabled. This is the case by default.

If this doesn’t help, you can try the following things:

- Make sure game mode is not enabled in Windows.

- Make sure no “game booster” is enabled in your anti virus program (applies to some versions of Norton, McAfee, BullGuard and maybe others) or graphics driver.

- Try setting VSeeFace and the facetracker.exe to realtime priority in the details tab of the task manager.

- Try using the Spout2 capture option instead of a game capture.

- Run VSeeFace and OBS as admin.

- Make sure VSeeFace has its framerate capped at 60fps.

- Turn off Steam overlay for the game.

- Turn on VSync for the game.

- Try setting the game to borderless/windowed fullscreen.

- Set a framerate cap for the game as well and lower its graphics settings.

- Try setting the same frame rate for both VSeeFace and the game.

- In the case of multiple screens, set all of them to the same refresh rate.

- Turn off NVIDIA G-Sync.

- See if any of this helps: this or this

It can also help to reduce the tracking and rendering quality settings a bit if it’s just your PC in general struggling to keep up. For further information on this, please check the performance tuning section.

I’m looking straight ahead, but my eyes are looking all the way in some direction?

Make sure the look offset sliders are centered. They can be used to correct the gaze for avatars that don’t have centered irises, but they can also make things look quite wrong when set up incorrectly.

My eyebrows barely move?

Make sure your eyebrow offset slider is centered. It can be used to overall shift the eyebrow position, but if moved all the way, it leaves little room for them to move.

How do I adjust the Leap Motion’s position? My arms are stiff and stretched out?

First, hold the alt key and right click to zoom out until you can see the Leap Motion model in the scene. Then use the sliders to adjust the model’s position to match its location relative to yourself in the real world. You can refer to this video to see how the sliders work.

What about privacy? Is any of my data or my face transmitted online? Can my face leak into the VSeeFace window?

I took a lot of care to minimize possible privacy issues. The face tracking is done in a separate process, so the camera image can never show up in the actual VSeeFace window, because it only receives the tracking points (you can see what those look like by clicking the button at the bottom of the General settings; they are very abstract). If you are extremely worried about having a webcam attached to the PC running VSeeFace, you can use the network tracking or phone tracking functionalities. No tracking or camera data is ever transmitted anywhere online and all tracking is performed on the PC running the face tracking process.

The onnxruntime library used in the face tracking process by default includes telemetry that is sent to Microsoft, but I have recompiled it to remove this telemetry functionality, so nothing should be sent out from it. Even if it was enabled, it wouldn’t send any personal information, just generic usage data.

When starting, VSeeFace downloads one file from the VSeeFace website to check if a new version has been released and display an update notification message in the upper left corner. There are no automatic updates. It shouldn’t establish any other online connections.

Depending on certain settings, VSeeFace can receive tracking data from other applications, either locally or over the network, but this is not a privacy issue. If the VMC protocol sender is enabled, VSeeFace will send blendshape and bone animation data to the specified IP address and port.
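
To give an idea of what this kind of data looks like on the wire, here is a minimal sketch of sending one blendshape value as a VMC protocol message. The VMC protocol is built on OSC messages sent over UDP; the addresses `/VMC/Ext/Blend/Val` and `/VMC/Ext/Blend/Apply` come from the VMC protocol specification, while the helper names, the target host and the port 39539 are assumptions chosen for illustration. It is not VSeeFace’s own sender code.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: UTF-8 bytes, NUL-terminated, padded to a multiple of 4."""
    raw = text.encode("utf-8") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build one OSC message; only str and float arguments are supported here."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            type_tags += "s"
            payload += osc_string(arg)
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(type_tags) + payload


def send_blendshape(name: str, value: float,
                    host: str = "127.0.0.1", port: int = 39539) -> None:
    """Send one blendshape value, then ask the receiver to apply it.

    host and port are assumptions; use whatever the receiving application
    is configured to listen on.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, float(value)), (host, port))
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), (host, port))


if __name__ == "__main__":
    send_blendshape("Joy", 1.0)
```

Only numeric animation values like these are contained in such messages, no camera images.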

As for data stored on the local PC, there are a few log files to help with debugging, which will be overwritten after restarting VSeeFace twice, and the user data. This data can be found as described here. Screenshots made with the S or Shift+S hotkeys will be stored in a folder called VSeeFace inside your profile’s pictures folder.

The VSeeFace website does use Google Analytics, because I’m kind of curious about who comes here to download VSeeFace, but the program itself doesn’t include any analytics.

You can also check out this article about how to keep your private information private as a streamer and VTuber. It’s not complete, but it’s a good introduction with the most important points.

I moved my Leap Motion from the desk to a neck holder, changed the position to chest and now my arms are in the sky?

Changing the position also changes the height of the Leap Motion in VSeeFace, so just pull the Leap Motion position’s height slider way down. Zooming out may also help.

My Leap Motion complains that I need to update its software, but I’m already on the newest version of V2?

To fix this error, please install the V5.2 (Gemini) SDK. It says it’s used for VR, but it is also used by desktop applications.

Capturing VSeeFace through Spout2 does not work even though it was enabled?

Your system might be missing the Microsoft Visual C++ 2010 Redistributable library. After installing it from here and rebooting it should work.

Do hotkeys work even while VSeeFace is in the background?

All configurable hotkeys also work while it is in the background or minimized, so the expression hotkeys, the audio lipsync toggle hotkey and the configurable position reset hotkey all work from any other program as well. On some systems it might be necessary to run VSeeFace as admin to get this to work properly for some reason.

My VSeeFace randomly disappears?/It can no longer find the facetracker.exe file?/Why did VSeeFace delete itself from my PC?

VSeeFace never deletes itself. This is usually caused by over-eager anti-virus programs. The face tracking is written in Python and for some reason anti-virus programs seem to dislike that and sometimes decide to delete VSeeFace or parts of it. There should be a way to whitelist the folder somehow to keep this from happening if you encounter this type of issue.

The VSeeFace SDK doesn’t work (no menu showing up, export failing with an error that a file wasn’t found)?

Check the “Console” tabs. There are probably some errors marked with a red symbol. You might have to scroll a bit to find them. These are usually some kind of compiler errors caused by other assets, which prevent Unity from compiling the VSeeFace SDK scripts. One way of resolving this is to remove the offending assets from the project. Another way is to make a new Unity project with only UniVRM 0.89 and the VSeeFace SDK in it.

I’m using a custom shader in my VSFAvatar, but a transparent section turns opaque parts of my model translucent in OBS?

In cases where using a shader with transparency leads to objects becoming translucent in OBS in an incorrect manner, setting the alpha blending operation to “Max” often helps. For example, there is a setting for this in the “Rendering Options”, “Blending” section of the Poiyomi shader. In the case of a custom shader, setting BlendOp Add, Max or similar, with the important part being the Max, should help.
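
A small worked example may show why “Max” matters for the alpha channel. It assumes the common blend factors `SrcAlpha` and `OneMinusSrcAlpha` are applied to alpha as well, which is an assumption about the shader and not something stated above; the function name is made up for illustration. With the Max operation, blend factors are ignored and the larger alpha wins.

```python
def blended_alpha(src_alpha: float, dst_alpha: float, op: str) -> float:
    """Alpha written to the render target when a fragment is drawn over it.

    Assumes SrcAlpha / OneMinusSrcAlpha blend factors for the "Add" case.
    """
    if op == "Add":
        return src_alpha * src_alpha + dst_alpha * (1.0 - src_alpha)
    if op == "Max":
        # Min/Max blend operations ignore the blend factors.
        return max(src_alpha, dst_alpha)
    raise ValueError(f"unknown blend operation: {op}")


if __name__ == "__main__":
    # A half transparent piece drawn over a fully opaque part of the model.
    print(blended_alpha(0.5, 1.0, "Add"))  # 0.75, the opaque part turns translucent
    print(blended_alpha(0.5, 1.0, "Max"))  # 1.0, the opaque part stays opaque
```

Since the capture uses the alpha channel of the rendered image for transparency, an alpha of 0.75 lets the background shine through where the model should be solid.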

Can I switch avatars with a hotkey?

There is the “L” hotkey, which lets you directly load a model file. In general, loading models is too slow to be useful for use through hotkeys. If you want to switch outfits, I recommend adding them all to one model. With VRM this can be done by making meshes transparent by changing the alpha value of their material through a material blendshape. For VSFAvatar, the objects can be toggled directly using Unity animations.

Is there support for RTX tracking?

It’s not directly supported, but you can try using it via the Expression Bridge application.

Since VSeeFace has no greenscreen option, how can I use it with Shoost?

Enable Spout2 support in the General settings of VSeeFace, enable Spout Capture in Shoost’s settings and you will be able to directly capture VSeeFace in Shoost using a Spout Capture layer. You can find screenshots of the options here.

When exporting a VSFAvatar, this error appears? Detected invalid components on avatar: "UniGLTF.RuntimeGltfInstance",

This error occurs with certain versions of UniVRM. Currently UniVRM 0.89 is supported. When installing a different version of UniVRM, make sure to first completely remove all folders of the version already in the project.

Can disabling hardware-accelerated GPU scheduling help fix performance issues?

Usually it is better left on! But in at least one case, the following setting has apparently fixed this: Windows => Graphics Settings => Change default graphics settings => Disable “Hardware-accelerated GPU scheduling”. In another case, setting VSeeFace to realtime priority seems to have helped. However, it has also been reported that turning it on helps. Please see here for more information.

I uploaded my model to VSeeFace and deleted the file, now it’s gone?

There is no online service that the model gets uploaded to, so no upload takes place at all and, in fact, calling it uploading is not accurate. When you add a model to the avatar selection, VSeeFace simply stores the location of the file on your PC in a text file. If you move the model file, rename it or delete it, it disappears from the avatar selection because VSeeFace can no longer find a file at that specific place. Please take care and back up your precious model files.
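
The behaviour described above can be pictured with a short sketch: a list of stored file locations is filtered down to the entries that still point at an existing file. This is an illustration of the idea only; the function name and the example paths are hypothetical, and it makes no claim about the actual format of the text file VSeeFace uses.

```python
from pathlib import Path


def visible_avatars(stored_paths):
    """Return only the stored locations where a file still exists."""
    return [path for path in stored_paths if Path(path).is_file()]


if __name__ == "__main__":
    # Hypothetical stored locations; a moved, renamed or deleted file
    # simply drops out of the result.
    stored = [r"C:\Models\my_avatar.vrm", r"C:\Models\old_avatar.vrm"]
    print(visible_avatars(stored))
```

Nothing is copied or uploaded in this picture, which is why keeping your own backup of the model file matters.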

I get an error when starting the tracking with DroidCam (or some other camera)?

Try switching the camera settings from Camera defaults to something else. The camera might be using an unsupported video format by default.

Is there a way to use an Android phone for face tracking instead of an iPhone?

You can use Suvidriel’s MeowFace, which can send the tracking data to VSeeFace using VTube Studio’s protocol.

Where can I find avatars I can use?

Many people make their own using VRoid Studio or commission someone. Vita is one of the included sample characters. You can also find VRM models on VRoid Hub and Niconi Solid, just make sure to follow the terms of use.

I have a model in a different format, how do I convert it to VRM?

Follow the official guide. The important thing to note is that it is a two step process. First, you export a base VRM file, which you then import back into Unity to configure things like blend shape clips. After that, you export the final VRM. If you look around, there are probably other resources out there too.

Can I add expressions to my model?

Yes, you can do so using UniVRM and Unity. You can find a tutorial here. Once the additional VRM blend shape clips are added to the model, you can assign a hotkey in the Expression settings to trigger it. The expression detection functionality is limited to the predefined expressions, but you can also modify those in Unity and, for example, use the Joy expression slot for something else.

My model’s arms/hair/whatever looks bizarrely twisted?

This is most likely caused by not properly normalizing the model during the first VRM conversion. To properly normalize the avatar during the first VRM export, make sure that Pose Freeze and Force T Pose are ticked on the ExportSettings tab of the VRM export dialog. I also recommend making sure that no jaw bone is set in Unity’s humanoid avatar configuration before the first export, since often a hair bone gets assigned by Unity as a jaw bone by mistake. If a jaw bone is set in the head section, click on it and unset it using the backspace key on your keyboard. If your model does have a jaw bone that you want to use, make sure it is correctly assigned instead.

Note that re-exporting a VRM will not work for properly normalizing the model. Instead the original model (usually FBX) has to be exported with the correct options set.

I just exported my model from VRoid 1.0 and the hair explodes or goes crazy?

The VRM spring bone colliders seem to be set up in an odd way for some exports. You can either import the model into Unity with UniVRM and adjust the colliders there (see here for more details) or use this application to adjust them.

My model is twitching sometimes?

If you have the fixed hips option enabled in the advanced options, try turning it off. If this helps, you can try the option to disable vertical head movement for a similar effect. If it doesn’t help, try turning up the smoothing, make sure that your room is brightly lit and try different camera settings.

There’s a bright outline around my model that stands out against dark backgrounds?

First, make sure you are using the ※ button to hide the UI and use a game capture in OBS with Allow transparency ticked. Color or chroma key filters are not necessary. If the issue persists, try right clicking the game capture in OBS and select Scale Filtering, then Bilinear.

My VSFAvatar has bright pixels around it even with the UI hidden?

Make sure to use a recent version of UniVRM (0.89). With VSFAvatar, the shader version from your project is included in the model file. Older versions of MToon had some issues with transparency, which are fixed in recent versions.

I converted my model to VRM format, but when I blink, my mouth moves or I activate an expression, it looks weird and the shadows shift?

Make sure to set “Blendshape Normals” to “None” or enable “Legacy Blendshape Normals” on the FBX when you import it into Unity and before you export your VRM. That should prevent this issue.

How can I get my eyebrows to work on a custom model?

You can add two custom VRM blend shape clips called “Brows up” and “Brows down” and they will be used for the eyebrow tracking. You can also add them on VRoid and Cecil Henshin models to customize how the eyebrow tracking looks. Also refer to the special blendshapes section.

When will VSeeFace support webcam based hand tracking (through MediaPipe or KalidoKit)?

Probably not anytime soon. In my experience, the current webcam based hand tracking solutions don’t work well enough to warrant spending the time to integrate them. I have written more about this here. If you require webcam based hand tracking, you can try using something like this to send the tracking data to VSeeFace, although I personally haven’t tested it yet. RiBLA Broadcast (β) is a nice standalone software which also supports MediaPipe hand tracking and is free and available for both Windows and Mac.

How can I capture VSeeFace with transparency in Twitch Studio?

Add VSeeFace as a regular screen capture and then add a transparent border like shown here. The background should now be transparent. I would still recommend using OBS, as that is the main supported software and allows using e.g. Spout2 through a plugin.

Can I use custom scripts with the VSFAvatar format?

No, and it’s not just because of the component whitelist. VSFAvatar is based on Unity asset bundles, which cannot contain code. If you export a model with a custom script on it, the script will not be inside the file. Only a reference to the script in the form “there is script 7feb5bfa-9c94-4603-9bff-dde52bd3f885 on the model with ‘speed’ set to 0.5” will actually reach VSeeFace. Since VSeeFace was not compiled with script 7feb5bfa-9c94-4603-9bff-dde52bd3f885 present, it will just produce a cryptic error. The explicit check for allowed components exists to prevent weird errors caused by such situations.

I want to run VSeeFace on another PC and use a capture card to capture it, is that possible?

I would recommend running VSeeFace on the PC that does the capturing, so it can be captured with proper transparency. The actual face tracking could be offloaded using the network tracking functionality to reduce CPU usage. If this is really not an option, please refer to the release notes of v1.13.34o. The settings.ini can be found as described here.

Where does VSeeFace put screenshots?

The screenshots are saved to a folder called VSeeFace inside your Pictures folder. You can make a screenshot by pressing S or a delayed screenshot by pressing shift+S.

I converted my model to VRM format, but the mouth doesn’t move or the eyes don’t blink?

VRM conversion is a two step process. After the first export, you have to put the VRM file back into your Unity project to actually set up the VRM blend shape clips and other things. You can follow the guide on the VRM website, which is very detailed with many screenshots.

Why does Windows give me a warning that the publisher is unknown?

Because I don’t want to pay a high yearly fee for a code signing certificate.

I have an N edition Windows and when I start VSeeFace, it just shows a big error message that the tracker is gone right away.

N versions of Windows are missing some multimedia features. First make sure your Windows is updated and then install the media feature pack.

How do I install a zip file?

Right click it, select Extract All... and press next. You should have a new folder called VSeeFace. Inside there should be a file called VSeeFace with an icon like the logo on this site. Double click on that to run VSeeFace. There’s a video here.

If Windows 10 won’t run the file and complains that the file may be a threat because it is not signed, you can try the following: Right click it -> Properties -> Unblock -> Apply or select exe file -> Select More Info -> Run Anyways

When loading my model, I get an error saying something about a NotVrm0Exception, what’s up with that?

VSeeFace does not support VRM 1.0 models. Make sure to export your model as VRM0X. Please refer to the VSeeFace SDK README for the currently recommended version of UniVRM.

Sometimes, when leaving the PC, my model suddenly moves away and starts acting weird.

Make sure that you don’t have anything in the background that looks like a face (posters, people, TV, etc.). Sometimes even things that are not very face-like at all might get picked up. A good way to check is to run the run.bat from VSeeFace_Data\StreamingAssets\Binary. It will show you the camera image with tracking points. If green tracking points show up somewhere on the background while you are not in the view of the camera, that might be the cause. Just make sure to close VSeeFace and any other programs that might be accessing the camera first.

What are the minimum system requirements to run VSeeFace?

I really don’t know, it’s not like I have a lot of PCs with various specs to test on. You need to have a DirectX compatible GPU, a 64 bit CPU and a way to run Windows programs. Beyond that, just give it a try and see how it runs. Face tracking can be pretty resource intensive, so if you want to run a game and stream at the same time, you may need a somewhat beefier PC for that. There is some performance tuning advice at the bottom of this page.

Does VSeeFace run on 32 bit CPUs?

No.

Does VSeeFace run on Mac?

No. Although, if you are very experienced with Linux and wine as well, you can try following these instructions for running it on Linux. A tutorial for running VSeeFace via Whisky can be found here, although chroma keying out the grey background may be difficult and the wine specific options detailed in the previous link do not seem to be supported. Alternatively, you can look into other options like 3tene or RiBLA Broadcast.

Does VSeeFace run on Linux?

It’s reportedly possible to run it using wine.

Does VSeeFace have special support for RealSense cameras?

No. It would be quite hard to add as well, because OpenSeeFace is only designed to work with regular RGB webcam images for tracking.

What should I look out for when buying a new webcam?

Before looking at new webcams, make sure that your room is well lit. It should be basically as bright as possible. At the same time, if you are wearing glasses, avoid positioning light sources in a way that will cause reflections on your glasses when seen from the angle of the camera. One thing to note is that insufficient light will usually cause webcams to quietly lower their frame rate. For example, my camera will only give me 15 fps even when set to 30 fps unless I have bright daylight coming in through the window, in which case it may go up to 20 fps. You can check the actual camera framerate by looking at the TR (tracking rate) value in the lower right corner of VSeeFace, although in some cases this value might be bottlenecked by CPU speed rather than the webcam.

As far as resolution is concerned, the sweet spot is 720p to 1080p. Going higher won’t really help all that much, because the tracking will crop out the section with your face and rescale it to 224x224, so if your face appears bigger than that in the camera frame, it will just get downscaled. Running the camera at lower resolutions like 640x480 can still be fine, but results will be a bit more jittery and things like eye tracking will be less accurate.
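To make the resolution argument concrete, here is a small Python sketch of the arithmetic. It assumes, purely for illustration, that the face takes up about one third of the frame height; that ratio and the function name are hypothetical and not taken from VSeeFace. Only the 224x224 tracker input size comes from the text above.

```python
TRACKER_INPUT_PX = 224  # size the face crop is rescaled to, as described above


def face_scale_factor(face_px: float, tracker_input_px: int = TRACKER_INPUT_PX) -> float:
    """Scale factor applied to a square face crop of face_px pixels.

    A factor below 1 means the crop is downscaled (extra camera resolution
    is thrown away); above 1 means it is upscaled (no additional detail).
    """
    if face_px <= 0:
        raise ValueError("face_px must be positive")
    return tracker_input_px / face_px


if __name__ == "__main__":
    # Hypothetical assumption: the face fills one third of the frame height.
    for label, frame_height in [("640x480", 480), ("1280x720", 720), ("1920x1080", 1080)]:
        face_px = frame_height / 3
        print(f"{label}: face ~{face_px:.0f}px, scale factor {face_scale_factor(face_px):.2f}")
```

Under that assumption, the face crop at 720p is already slightly larger than the tracker input, so higher resolutions mostly add pixels that are discarded again.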

By default, VSeeFace caps the camera framerate at 30 fps, so there is not much point in getting a webcam with a higher maximum framerate. While there is an option to remove this cap, actually increasing the tracking framerate to 60 fps will only make a very tiny difference with regards to how nice things look, but it will double the CPU usage of the tracking process. However, the fact that a camera is able to do 60 fps might still be a plus with respect to its general quality level.

Having a ring light for the camera can be helpful with avoiding tracking issues because it is too dark, but it can also cause issues with reflections on glasses and can feel uncomfortable.

I have heard reports that getting a wide angle camera helps, because it will cover more area and will allow you to move around more before losing tracking because the camera can’t see you anymore, so that might be a good thing to look out for.

As a final note, for higher resolutions like 720p and 1080p, I would recommend looking for a USB3 webcam, rather than a USB2 one. With USB2, the images captured by the camera will have to be compressed (e.g. using MJPEG) before being sent to the PC, which usually makes them look worse and can have a negative impact on tracking quality. With USB3, less or no compression should be necessary and images can probably be transmitted in RGB or YUV format.

Does VSeeFace support Live2D models?

No, VSeeFace only supports 3D models in VRM format. While there are free tiers for Live2D integration licenses, adding Live2D support to VSeeFace would only make sense if people could load their own models. In that case, it would be classified as an “Expandable Application”, which needs a different type of license, for which there is no free tier. As VSeeFace is a free program, integrating an SDK that requires the payment of licensing fees is not an option.

Would a Live2D model be easier on my PC than a 3D model?

While it intuitively might seem like it should be that way, it’s not necessarily the case. When using VTube Studio and VSeeFace with webcam tracking, VSeeFace usually uses a bit less system resources. If iPhone (or Android with MeowFace) tracking is used without any webcam tracking, it will get rid of most of the CPU load in both cases, but VSeeFace usually still performs a little better. Of course, it always depends on the specific circumstances. Highly complex 3D models can use up a lot of GPU power, but in the average case, just going Live2D won’t reduce rendering costs compared to 3D models.

I am using a Canon EOS camera and the tracking won’t work.

Try setting the camera settings on the VSeeFace starting screen to default settings. The selection will be marked in red, but you can ignore that and press start anyways. It usually works this way.

I heard that Luppet is good, but I don’t want to pay for it to try it. Is it better than VSeeFace?

You can try Luppet’s free trial.

Does VSeeFace support the Tobii eye tracker?

No, VSeeFace cannot use the Tobii eye tracker SDK due to its licensing terms.

Can I use VSeeFace with Xsplit Broadcaster?

You can enable the virtual camera in VSeeFace, set a single colored background image and add the VSeeFace camera as a source, then go to the color tab and enable a chroma key with the color corresponding to the background image. Note that this may not give as clean results as capturing in OBS with proper alpha transparency.

Please note that the camera needs to be reenabled every time you start VSeeFace unless the option to keep it enabled is enabled. This option can be found in the advanced settings section.

Is VSeeFace open source? I heard it was open source.

No. It uses paid assets from the Unity asset store that cannot be freely redistributed. However, the actual face tracking and avatar animation code is open source. You can find it here and here.

Why isn’t VSeeFace open source?

As I wrote here: It uses proprietary assets from the Unity asset store. I could in theory release a repository with all those dependencies removed so people who feel like buying them themselves could build their own release, but keeping a separate repository for that up to date would just mean extra work for me. It’s also my personal feeling that I just simply don’t want to make it open source.

The hard part behind it is the face tracking library, OpenSeeFace, which is open source. You are free to build your own open source VTubing application on top of it.

How can I trigger expressions from AutoHotkey?

It seems that the regular send key command doesn’t work, but adding a delay to prolong the key press helps. You can try something like this:

SendInput, {LCtrl down}{F19 down}

sleep, 40 ; lower sleep time can cause issues for rapid repeated inputs

SendInput, {LCtrl up}{F19 Up}

My face looks different in VSeeFace than in other programs (e.g. one eye is closed, the mouth is always open, …)?

Your model might have a misconfigured “Neutral” expression, which VSeeFace applies by default. Most other programs do not apply the “Neutral” expression, so the issue would not show up in them.

I’m using the new stable version of VRoid (1.0) and VSeeFace is not showing the “Neutral” expression I configured?

VRoid 1.0 lets you configure a “Neutral” expression, but it doesn’t actually export it, so there is nothing for it to apply. You can configure it in Unity instead, as described in this video.

I still have questions or feedback, where should I take it?

If you have any issues, questions or feedback, please come to the #vseeface channel of @Virtual_Deat’s discord server.

Virtual camera

The virtual camera can be used to use VSeeFace for teleconferences, Discord calls and similar. It can also be used in situations where using a game capture is not possible or very slow, due to specific laptop hardware setups.

To use the virtual camera, you have to enable it in the General settings. For performance reasons, it is disabled again after closing the program. Starting with version 1.13.27, the virtual camera will always provide a clean (no UI) image, even while the UI of VSeeFace is not hidden using the small ※ button in the lower right corner.

When using it for the first time, you first have to install the camera driver by clicking the installation button in the virtual camera section of the General settings. This should open a UAC prompt asking for permission to make changes to your computer, which is required to set up the virtual camera. If no such prompt appears and the installation fails, starting VSeeFace with administrator permissions may fix this, but it is not generally recommended. After a successful installation, the button will change to an uninstall button that allows you to remove the virtual camera from your system.

After installation, it should appear as a regular webcam. The virtual camera only supports the resolution 1280x720. Changing the window size will most likely lead to undesirable results, so it is recommended that the Allow window resizing option be disabled while using the virtual camera.

The virtual camera supports loading background images, which can be useful for vtuber collabs over discord calls, by setting a unicolored background.

Should you encounter strange issues with the virtual camera and have previously used it with a version of VSeeFace earlier than 1.13.22, please try uninstalling it using the UninstallAll.bat, which can be found in VSeeFace_Data\StreamingAssets\UnityCapture. If the camera outputs a strange green/yellow pattern, please do this as well.

Transparent virtual camera

If supported by the capture program, the virtual camera can be used to output video with alpha transparency. To make use of this, a fully transparent PNG needs to be loaded as the background image. Starting with version 1.13.25, such an image can be found in VSeeFace_Data\StreamingAssets. Partially transparent backgrounds are supported as well. Please note that using (partially) transparent background images with a capture program that does not support RGBA webcams can lead to color errors. OBS supports ARGB video camera capture, but requires some additional setup. Apparently, the Twitch video capturing app supports it by default.

To set OBS to capture video from the virtual camera with transparency, please follow these settings. The important settings are:

- Resolution/FPS: Custom

- Resolution: 1280x720

- Video Format: ARGB

As the virtual camera keeps running even while the UI is shown, using it instead of a game capture can be useful if you often make changes to settings during a stream.

Network tracking

It is possible to perform the face tracking on a separate PC. This can, for example, help reduce CPU load. This process is a bit advanced and requires some general knowledge about the use of commandline programs and batch files. To do this, copy either the whole VSeeFace folder or the VSeeFace_Data\StreamingAssets\Binary\ folder to the second PC, which should have the camera attached. Inside this folder is a file called run.bat. Running this file will first ask for some information to set up the camera and then run the tracker process that is usually run in the background of VSeeFace. If you entered the correct information, it will show an image of the camera feed with overlaid tracking points, so do not run it while streaming your desktop. This can also be useful to figure out issues with the camera or tracking in general. The tracker can be stopped with the q key, while the image display window is active.

In the following, the PC running VSeeFace will be called PC A, and the PC running the face tracker will be called PC B.

To use it for network tracking, edit the run.bat file or create a new batch file with the following content:

@echo off

facetracker -l 1

echo Make sure that nothing is accessing your camera before you proceed.

set /p cameraNum=Select your camera from the list above and enter the corresponding number:

facetracker -a %cameraNum%

set /p dcaps=Select your camera mode or -1 for default settings:

set /p fps=Select the FPS:

set /p ip=Enter the LAN IP of the PC running VSeeFace:

facetracker -c %cameraNum% -F %fps% -D %dcaps% -v 3 -P 1 -i %ip% --discard-after 0 --scan-every 0 --no-3d-adapt 1 --max-feature-updates 900

pause

If you would like to disable the webcam image display, you can change -v 3 to -v 0.

When starting this modified file, in addition to the camera information, you will also have to enter the local network IP address of PC A. You can start and stop the tracker process on PC B and VSeeFace on PC A independently. When no tracker process is running, the avatar in VSeeFace will simply not move.

On the VSeeFace side, select [OpenSeeFace tracking] in the camera dropdown menu of the starting screen. Also, enter this PC’s (PC A) local network IP address in the Listen IP field. Do not enter the IP address of PC B or it will not work. Press the start button. PC A should now be able to receive tracking data from PC B, while the tracker is running on PC B. You can find PC A’s local network IP address by enabling the VMC protocol receiver in the General settings and clicking on Show LAN IP.

If you are sure that the camera number will not change and know a bit about batch files, you can also modify the batch file to remove the interactive input and just hard code the values.
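As a rough illustration of hard coding the values, the following Python sketch assembles the same facetracker command line as the batch file above, without any prompts. The camera number, FPS and IP address are placeholder values you would need to replace, and the helper name is made up for this example. The flags themselves are copied from the batch file.

```python
# Placeholder values (assumptions for illustration only): replace with your own.
CAMERA_NUM = 0          # number shown by "facetracker -l 1"
CAMERA_MODE = -1        # -1 selects the default camera settings
FPS = 30
PC_A_IP = "192.168.1.10"  # LAN IP of the PC running VSeeFace


def build_tracker_command(camera: int, fps: int, dcaps: int, ip: str, show_video: bool = True) -> list:
    """Return the facetracker argument list used by the batch file above."""
    return [
        "facetracker",
        "-c", str(camera),
        "-F", str(fps),
        "-D", str(dcaps),
        "-v", "3" if show_video else "0",  # -v 0 disables the webcam image display
        "-P", "1",
        "-i", ip,
        "--discard-after", "0",
        "--scan-every", "0",
        "--no-3d-adapt", "1",
        "--max-feature-updates", "900",
    ]


if __name__ == "__main__":
    # Printing only; to launch the tracker you could pass this list to
    # subprocess.run() from inside the Binary folder.
    print(" ".join(build_tracker_command(CAMERA_NUM, FPS, CAMERA_MODE, PC_A_IP)))
```

The printed line can also simply be pasted into a batch file in place of the interactive version.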

Troubleshooting

If things don’t work as expected, check the following things:

- Starting run.bat should open a window with black background and grey text. Make sure you entered the necessary information and pressed enter.

- While running, many lines showing something like Took 20ms at the beginning should appear. While a face is in the view of the camera, lines with Confidence should appear too. A second window should show the camera view and red and yellow tracking points overlaid on the face. If this is not the case, something is wrong on this side of the process.

- If the face tracker is running correctly, but the avatar does not move, confirm that the Windows firewall is not blocking the connection and that on both sides the IP address of PC A (the PC running VSeeFace) was entered.
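To check whether tracking data reaches PC A at all, a small listener like the following Python sketch may help. It assumes that the tracker sends its data as UDP packets and that you know which port it sends to; the port is a parameter here and not a value taken from this page. VSeeFace has to be closed while this runs, since only one program can listen on the port.

```python
import socket
import time


def open_listener(port: int, host: str = "0.0.0.0") -> socket.socket:
    """Open a UDP socket bound to the given port (port 0 picks a free one)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def count_packets(sock: socket.socket, seconds: float = 3.0) -> int:
    """Count UDP packets arriving on sock within the given time window."""
    count = 0
    sock.settimeout(0.1)
    deadline = time.monotonic() + seconds
    while time.monotonic() < deadline:
        try:
            sock.recvfrom(65535)
            count += 1
        except socket.timeout:
            pass  # nothing arrived in this interval, keep waiting
    return count


def check_port(port: int, seconds: float = 3.0) -> None:
    """Print how many packets arrived on the given port."""
    with open_listener(port) as sock:
        received = count_packets(sock, seconds)
    if received:
        print(f"Received {received} packets, the network path seems fine.")
    else:
        print("No packets received, check the firewall and the entered IP address.")
```

Calling check_port with the tracker's target port while the tracker runs on PC B should report a steadily growing number of packets if nothing is blocking the connection.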

Special blendshapes

VSeeFace has special support for certain custom VRM blend shape clips:

- Surprised is supported by the simple and experimental expression detection features.

- Brows up and Brows down will be used for eyebrow tracking if present on a model.

- Starting with v1.13.34, if all of the following custom VRM blend shape clips are present on a model, they will be used for audio based lip sync in addition to the regular A, I, U, E and O blend shapes: SIL, CH, DD, FF, KK, NN, PP, RR, SS, TH

You can refer to this reference for how the mouth should look for each of these visemes. The existing VRM blend shape clips A, I, U, E and O are mapped to aa, ih, ou, E and oh respectively. Adding only a subset of the additional VRM blend shape clips is not supported.

I do not recommend using the Blender CATS plugin to automatically generate shapekeys for these blendshapes, because VSeeFace will already follow a similar approach in mixing the A, I, U, E and O shapes by itself, so setting up custom VRM blend shape clips would be unnecessary effort. In this case it is better to have only the standard A, I, U, E and O VRM blend shape clips on the model.

Expression detection

You can set up VSeeFace to recognize your facial expressions and automatically trigger VRM blendshape clips in response. There are two different modes that can be selected in the General settings.

Simple expression detection

This mode is easy to use, but it is limited to the Fun, Angry and Surprised expressions. Simply enable it and it should work. There are two sliders at the bottom of the General settings that can be used to adjust how it works.

To trigger the Fun expression, smile, moving the corners of your mouth upwards. To trigger the Angry expression, do not smile and move your eyebrows down. To trigger the Surprised expression, move your eyebrows up.

Experimental expression detection

This mode supports the Fun, Angry, Joy, Sorrow and Surprised VRM expressions. To use it, you first have to teach the program how your face will look for each expression, which can be tricky and take a bit of time. What kind of face you make for each of them is completely up to you, but it’s usually a good idea to enable the tracking point display in the General settings, so you can see how well the tracking can recognize the face you are making. The following video will explain the process:

When the Calibrate button is pressed, most of the recorded data is used to train a detection system. The rest of the data will be used to verify the accuracy. This will result in a number between 0 (everything was misdetected) and 1 (everything was detected correctly) and is displayed above the calibration button. A good rule of thumb is to aim for a value between 0.95 and 0.98. While this might be unexpected, a value of 1 or very close to 1 is not actually a good thing and usually indicates that you need to record more data. A value significantly below 0.95 indicates that, most likely, some mixup occurred during recording (e.g. your sorrow expression was recorded for your surprised expression). If this happens, either reload your last saved calibration or restart from the beginning.
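The rule of thumb above can be written down as a small Python helper. This is only a sketch: the text does not define exactly what “significantly below 0.95” or “very close to 1” mean, so treating everything outside the 0.95 to 0.98 band as a warning is a simplifying assumption, and the function name is made up for this example.

```python
def interpret_calibration_score(score: float) -> str:
    """Map the accuracy shown above the calibration button to a rough hint."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < 0.95:
        # Assumption: any value below the target band is treated as a warning.
        return "low: expressions may have been mixed up while recording"
    if score <= 0.98:
        return "good: within the 0.95 to 0.98 target range"
    # Assumption: any value above the target band counts as "close to 1".
    return "too high: record more data for each expression"


if __name__ == "__main__":
    for value in (0.80, 0.96, 1.00):
        print(value, "->", interpret_calibration_score(value))
```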

It is also possible to set up only a few of the possible expressions. This usually improves detection accuracy. However, make sure to always set up the Neutral expression. This expression should contain any kind of expression that should not be detected as one of the other expressions. To remove an already set up expression, press the corresponding Clear button and then Calibrate.

Having an expression detection setup loaded can increase the startup time of VSeeFace even if expression detection is disabled or set to simple mode. To avoid this, press the Clear calibration button, which will clear out all calibration data and prevent it from being loaded at startup. You can always load your detection setup again using the Load calibration button.

VMC protocol support

VSeeFace supports both sending and receiving motion data (humanoid bone rotations, root offset, blendshape values) using the VMC protocol introduced by Virtual Motion Capture. If both sending and receiving are enabled, sending will be done after received data has been applied. In this case, make sure that VSeeFace is not sending data to itself, i.e. the ports for sending and receiving are different, otherwise very strange things may happen.

When receiving motion data, VSeeFace can additionally perform its own tracking and apply it. Track face features will apply blendshapes, eye bone and jaw bone rotations according to VSeeFace’s tracking. If only Track fingers and Track hands to shoulders are enabled, the Leap Motion tracking will be applied, but camera tracking will remain disabled. If any of the other options are enabled, camera based tracking will be enabled and the selected parts of it will be applied to the avatar.

Please note that received blendshape data will not be used for expression detection and that, if received blendshapes are applied to a model, triggering expressions via hotkeys will not work.

You can find a list of applications with support for the VMC protocol here.

Note: VMC protocol works by sending data over the OSC protocol. However, this does not mean that it is compatible with any OSC compatible application, unless that application specifically supports VMC protocol.
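To illustrate what “data over OSC” means in practice, here is a minimal Python sketch that encodes OSC messages by hand and sends a blendshape value using the /VMC/Ext/Blend/Val and /VMC/Ext/Blend/Apply addresses from the VMC protocol specification. The function names are made up for this example, the host and port are whatever you configured for the VMC receiver, and only string, integer and float arguments are handled. Treat it as a sketch of the wire format, not as a complete VMC sender.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: null-terminated and padded to a multiple of 4 bytes."""
    data = text.encode("utf-8") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build a single OSC message with string, int or float arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # 32 bit big-endian float
        elif isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # 32 bit big-endian integer
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_blendshape(host: str, port: int, name: str, value: float) -> None:
    """Send one blendshape value followed by the apply message over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, float(value)), (host, port))
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), (host, port))
```

A generic OSC application would produce packets with the same framing, but it only becomes useful to VSeeFace if it also uses the addresses and argument layouts that the VMC protocol defines.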

VR tracking

To combine VR tracking with VSeeFace’s tracking, you can either use Tracking World or the pixivFANBOX version of Virtual Motion Capture to send VR tracking data over VMC protocol to VSeeFace. This video by Suvidriel explains how to set this up with Virtual Motion Capture.

Model animation or posing

Using the prepared Unity project and scene, pose data will be sent over VMC protocol while the scene is being played. If an animator is added to the model in the scene, the animation will be transmitted, otherwise it can be posed manually as well. For best results, it is recommended to use the same models in both VSeeFace and the Unity scene.

iPhone face tracking

Perfect sync blendshape information and tracking data can be received from the iFacialMocap and FaceMotion3D applications. For this to work properly, it is necessary for the avatar to have the necessary 52 ARKit blendshapes. For VRoid avatars, it is possible to use HANA Tool to add these blendshapes as described below. To do so, make sure that iPhone and PC are connected to one network and start the iFacialMocap app on the iPhone. It should display the phone’s IP address. Enable the iFacialMocap receiver in the general settings of VSeeFace and enter the IP address of the phone. The avatar should now move according to the received data, according to the settings below.

When hybrid lipsync and the Only open mouth according to one source option are enabled, the following ARKit blendshapes are disabled while audio visemes are detected: JawOpen, MouthFunnel, MouthPucker, MouthShrugUpper, MouthShrugLower, MouthClose, MouthUpperUpLeft, MouthUpperUpRight, MouthLowerDownLeft, MouthLowerDownRight

iFacialMocap Troubleshooting

In case of connection issues, you can try the following:

- Make sure the iPhone and PC are on the same network.

- Check the Windows firewall’s Advanced settings. In there, make sure that in the Inbound Rules VSeeFace is set to accept connections. It was also reported that changing the path of the VSeeFace program to include the .exe at the end can help. If VSeeFace does not show up at all, manually adding it may help.

- In iOS, look for iFacialMocap in the app list and ensure that it has the Local Network permission.

- Apparently sometimes starting VSeeFace as administrator can help.

- Restart the PC.

Some security and anti virus products include their own firewall that is separate from the Windows one, so make sure to check there as well if you use one.

If it still doesn’t work, you can confirm basic connectivity using the MotionReplay tool. Close VSeeFace, start MotionReplay, enter the iPhone’s IP address and press the button underneath. You should see the packet counter counting up. If the packet counter does not count up, data is not being received at all, indicating a network or firewall issue.

If you encounter issues where the head moves, but the face appears frozen:

- Make sure that all 52 VRM blend shape clips are present.

- Make sure that the various Track ... options are enabled in the expression settings.

- Make sure that there isn’t a still enabled VMC protocol receiver overwriting the face information.

- Check that the iFacialMocap smoothing slider is not set close to 1.

If you encounter issues with the gaze tracking:

- Make sure that both the gaze strength and gaze sensitivity sliders are pushed up.

- Make sure that there isn’t a still enabled VMC protocol receiver overwriting the face information.

- If your eyes are blendshape based, not bone based, make sure that your model does not have eye bones assigned in the humanoid configuration of Unity. It is also possible to unmap these bones in VRM files by following these steps.

- If your model uses ARKit blendshapes to control the eyes, set the gaze strength slider to zero, otherwise, both bone based eye movement and ARKit blendshape based gaze may get applied.

Waidayo method

Before iFacialMocap support was added, the only way to receive tracking data from the iPhone was through Waidayo or iFacialMocap2VMC.

Certain iPhone apps like Waidayo can send perfect sync blendshape information over the VMC protocol, which VSeeFace can receive, allowing you to use iPhone based face tracking. This requires a specially prepared avatar containing the necessary blendshapes. A list of these blendshapes can be found here. You can find an example avatar containing the necessary blendshapes here. An easy, but not free, way to apply these blendshapes to VRoid avatars is to use HANA Tool. It is also possible to use VSeeFace with iFacialMocap through iFacialMocap2VMC.

To combine iPhone tracking with Leap Motion tracking, enable the Track fingers and Track hands to shoulders options in the VMC reception settings in VSeeFace. Enabling all other options except Track face features as well will apply the usual head tracking and body movements, which may allow more freedom of movement than just the iPhone tracking on its own.

Waidayo step by step guide

- Make sure the iPhone and PC are on the same network

- Run VSeeFace

- Load a compatible avatar (sample, it’s also possible to apply those blendshapes to a VRoid avatar using HANA Tool)

- Select a camera on the starting screen as usual, do not select “[Network tracking]” or “[OpenSeeFace tracking]”, as this option refers to something else. If you do not have a camera, select “[OpenSeeFace tracking]”, but leave the fields empty.

- Disable the VMC protocol sender in the general settings if it’s enabled

- Enable the VMC protocol receiver in the general settings

- Change the port number from 39539 to 39540

- Under the VMC receiver, enable all the “Track …” options except for face features at the top

- The setting should look like this

- You should now be able to move your avatar normally, except the face is frozen other than expressions

- Install and run Waidayo on the iPhone

- Load your model into Waidayo by naming it default.vrm and putting it into the Waidayo app’s folder on the phone like this or transfer it using this application (I’m not sure, if you have found clear instructions I can put here, please let me know)

- Go to the settings (設定) in Waidayo

- Set Send Motion IP Address to your PC’s LAN IP address. You can find it by clicking on Show LAN IP at the beginning of the VMC protocol receiver settings in VSeeFace.

- Make sure that the port is set to the same number as in VSeeFace (39540)

- Your model’s face should start moving, including some special things like puffed cheeks, tongue or smiling only on one side
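If nothing moves after the last step, it can help to check whether packets from the phone reach the PC at all. The following is a rough sketch that listens on the receiver port from the guide (39540) and prints where each packet came from. It assumes VSeeFace is closed or its VMC receiver is disabled while the check runs, since normally only one program can bind the port. The function names are hypothetical.

```python
import socket


def osc_address(packet: bytes) -> str:
    """Return the OSC address (or '#bundle') at the start of a UDP packet."""
    end = packet.find(b"\x00")
    if end == -1:
        end = len(packet)
    return packet[:end].decode("utf-8", errors="replace")


def print_incoming(port: int = 39540, max_packets: int = 10,
                   timeout: float = 5.0) -> int:
    """Print sender and OSC address of incoming packets; return the count."""
    received = 0
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))  # listen on all network interfaces
        sock.settimeout(timeout)
        try:
            while received < max_packets:
                data, (host, _sender_port) = sock.recvfrom(65535)
                print(f"{host}: {osc_address(data)}")
                received += 1
        except socket.timeout:
            pass  # nothing (more) arrived within the timeout
    return received
```

Running `print_incoming()` while Waidayo is sending should list the phone’s IP address. If it returns 0, the firewall, the IP address or the port number is the likely culprit.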

If VSeeFace’s tracking should be disabled to reduce CPU usage, only enable “Track fingers” and “Track hands to shoulders” on the VMC protocol receiver. This should lead to VSeeFace’s tracking being disabled while leaving the Leap Motion operable. If the tracking remains on, this may be caused by expression detection being enabled. In this case, additionally set the expression detection setting to none.

Using HANA Tool to add perfect sync blendshapes to VRoid models

A full Japanese guide can be found here. The following gives a short English language summary. To use HANA Tool to add perfect sync blendshapes to a VRoid model, you need to install Unity, create a new project and add the UniVRM package and then the VRM version of the HANA Tool package to your project. You can do this by dragging the .unitypackage files into the file section of the Unity project. Then, make sure that your VRoid VRM is exported from VRoid v0.12 (or whatever is supported by your version of HANA_Tool) without optimizing or decimating the mesh. Create a folder for your model in the Assets folder of your Unity project and copy in the VRM file. It should now get imported.

- Drag the model file from the files section in Unity to the hierarchy section. It should now appear in the scene view. Click the triangle in front of the model in the hierarchy to unfold it. You should see an entry called Face. (Screenshot)

- From the HANA_Tool menu at the top, select Reader. A new window should appear. Drag the Face object into the SkinnedMeshRenderer slot at the top of the new window. Select the VRoid version and type of your model. Make sure to select Add at the bottom, then click Read BlendShapes. (Screenshot)

- If you get a window with a long message about the number of vertices not matching, it means that your model does not match the requirements. It might have been exported from a different VRoid version, been decimated or edited, etc. If you get a window saying 変換完了 or that it finished reading blendshapes, the blendshapes were successfully added and you can close the Reader window.

- From the HANA_Tool menu at the top, select ClipBuilder. A new window should appear. Drag the model from the hierarchy into the slot at the top and run it. For older versions than v2.9.5b, select AddBlendShapeClip. A new window should appear. Drag the model from the hierarchy into the VRMBlendShapeProxy slot at the top of the new window. Again, drag the Face object into the SkinnedMeshRenderer slot underneath. Select your model type, not Extra, and press the button at the bottom. (Screenshot)

- You should get a window saying it successfully added the blendshape clips or 変換完了, meaning you can close this window as well.

- Try pressing the play button in Unity, switch back to the Scene tab and select your model in the hierarchy. Scroll down in the inspector until you see a list of blend shapes. You should be able to move the sliders and see the face of your model change. Below the regular VRM and VRoid blendshapes, there should now be a bit more than 50 additional blendshapes for perfect sync use, like one to puff your cheeks. (Screenshot)

- Stop the scene, select your model in the hierarchy and from the VRM menu, select UniVRM, then Export humanoid. All the necessary details should already be filled in, so you can press export to save your new VRM file. (Screenshot)

Perception Neuron tracking

It is possible to stream Perception Neuron motion capture data into VSeeFace by using the VMC protocol. To do so, load this project into Unity 2019.4.31f1 and load the included scene in the Scenes folder. Create a new folder for your VRM avatar inside the Avatars folder and put in the VRM file. Unity should import it automatically. You can then delete the included Vita model from the scene and add your own avatar by dragging it into the Hierarchy section on the left.

You can now start the Neuron software and set it up for transmitting BVH data on port 7001. Once this is done, press play in Unity to play the scene. If no red text appears, the avatar should have been set up correctly and should be receiving tracking data from the Neuron software, while also sending the tracking data over VMC protocol.

Next, you can start VSeeFace and set up the VMC receiver according to the port listed in the message displayed in the game view of the running Unity scene. Once enabled, it should start applying the motion tracking data from the Neuron to the avatar in VSeeFace.

The provided project includes NeuronAnimator by Keijiro Takahashi and uses it to receive the tracking data from the Perception Neuron software and apply it to the avatar.

Full body tracking with ThreeDPoseTracker

ThreeDPoseTracker allows webcam based full body tracking. While the ThreeDPoseTracker application can be used freely for non-commercial and commercial uses, the source code is for non-commercial use only.

It allows transmitting its pose data using the VMC protocol, so by enabling VMC receiving in VSeeFace, you can use its webcam based full body tracking to animate your avatar. From what I saw, it is set up in such a way that the avatar will face away from the camera in VSeeFace, so you will most likely have to turn the lights and camera around. By enabling the Track face features option, you can apply VSeeFace’s face tracking to the avatar.

VMC protocol receiver troubleshooting

If you can’t get VSeeFace to receive anything, check these things first:

- Probably the most common issue is that the Windows firewall blocks remote connections to VSeeFace, so you might have to dig into its settings a bit to remove the block.

- Make sure both the phone and the PC are on the same network. If the phone is using mobile data, it won’t work. Sometimes, if the PC is on multiple networks, the “Show IP” button will also not show the correct address, so you might have to figure it out using ipconfig or some other way.

- Try disabling all the Track ... options to make sure the received tracking data isn’t getting overwritten by VSeeFace’s own tracking.
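As one possible "other way" for the network check above, here is a small sketch that guesses which address the PC uses for outgoing traffic and tests whether the phone’s address falls into the same subnet. The /24 prefix length is an assumption that fits many home networks but not all. On a PC with several networks, the guess reflects the default route, which may still not be the network the phone is on.

```python
import ipaddress
import socket


def guess_lan_ip() -> str:
    """Best-effort guess of the address this PC uses for outgoing traffic."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        try:
            # Connecting a UDP socket sends no packets; it only selects a route.
            # 192.0.2.1 is a reserved documentation address (TEST-NET-1).
            sock.connect(("192.0.2.1", 9))
            return sock.getsockname()[0]
        except OSError:
            return "127.0.0.1"  # no usable route found


def same_network(pc_ip: str, phone_ip: str, prefix_len: int = 24) -> bool:
    """True if both addresses fall into the same subnet of the given size."""
    network = ipaddress.ip_network(f"{pc_ip}/{prefix_len}", strict=False)
    return ipaddress.ip_address(phone_ip) in network
```

For example, `same_network(guess_lan_ip(), "192.168.1.77")` checks a phone address (made up here) against the PC’s guessed address.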

VRChat OSC support

Starting with 1.13.38, there is experimental support for VRChat’s avatar OSC support. When the VRChat OSC sender option in the advanced settings is enabled in VSeeFace, it will send the following avatar parameters:

- GazeX, a float from 0 to 1 representing the horizontal gaze direction from right to left, with 0.5 being the eyes looking straight forward.

- GazeY, a float from 0 to 1 representing the vertical gaze direction from down to up, with 0.5 being the eyes looking straight forward.

- Blink, a float from 0 to 1 with the eyes being fully open at 0 and fully closed at 1.

- MouthOpen, a float from 0 to 1 with the mouth being fully closed at 0 and fully open at 1.

- MouthWideNarrow, a float from -1 to 1 with the mouth having its regular shape at 0, being wider than normal at -1 and narrower than normal at 1. This will only become active when the mouth is also at least slightly open. Configuring a 0.25 deadzone around 0 might be advisable.

- BrowsDownUp, a float from -1 to 1 with the brows being all the way down at -1 and all the way up at 1, with 0 being the brows at their default position.

To make use of these parameters, the avatar has to be specifically set up for it. If it is, basic face tracking based animations can be applied to an avatar using these parameters. As wearing a VR headset will interfere with face tracking, this is mainly intended for playing in desktop mode.

Note: Only webcam based face tracking is supported at this point.
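To illustrate what a 0.25 deadzone around 0 means for a parameter like MouthWideNarrow, here is a small arithmetic sketch. In practice the deadzone would be configured in the avatar setup rather than in a script. Rescaling the remaining range back to -1 to 1 is a choice made for this example, not something the parameter description prescribes.

```python
def apply_deadzone(value: float, deadzone: float = 0.25) -> float:
    """Map values inside +/-deadzone to 0 and rescale the rest to [-1, 1]."""
    value = max(-1.0, min(1.0, value))  # clamp to the documented range
    magnitude = abs(value)
    if magnitude <= deadzone:
        return 0.0
    # Stretch the remaining range so that full deflection still reaches 1.
    scaled = (magnitude - deadzone) / (1.0 - deadzone)
    return scaled if value > 0 else -scaled
```

With the default deadzone, small jitter such as 0.1 becomes 0, while a fully narrow mouth (1.0) still maps to 1.0.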

Model preview in Unity

If you are working on an avatar, it can be useful to get an accurate idea of how it will look in VSeeFace before exporting the VRM. You can load this example project into Unity 2019.4.16f1 and load the included preview scene to preview your model with VSeeFace-like lighting settings. This project also allows posing an avatar and sending the pose to VSeeFace using the VMC protocol, starting with VSeeFace v1.13.34b.

After loading the project in Unity, load the provided scene inside the Scenes folder. If you press play, it should show some instructions on how to use it.

If you prefer setting things up yourself, the following settings in Unity should allow you to get an accurate idea of how the avatar will look with default settings in VSeeFace:

- (Screenshot) Edit -> Project Settings... -> Player -> Other Settings -> Color Space: Linear

- (Screenshot) Directional light: Color: FFFFFF (Hexadecimal), Intensity: 0.975, Rotation: 16, -146, -7.8, Shadow Type: No shadows

- (Screenshot) Camera icon next to Gizmos: Field of View: 16.1 (default focal length of 85mm) or 10.2 (135mm)

- (Screenshot) Optional, Main Camera: Clear Flags: Solid Color, Background: 808080 (Hexadecimal), Field of View: as above

- (Screenshot) Edit -> Project Settings... -> Quality, select Ultra and set the anti-aliasing to 8x
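The two field of view values in the list can be reproduced from the focal lengths with the usual pinhole camera formula. The sketch below assumes a sensor height of 24 mm (full frame). That value is not stated on this page; it is simply the one that matches both numbers.

```python
import math


def vertical_fov_degrees(focal_length_mm: float,
                         sensor_height_mm: float = 24.0) -> float:
    """Vertical field of view of an ideal pinhole camera, in degrees."""
    half_angle = math.atan(sensor_height_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)


print(round(vertical_fov_degrees(85), 1))   # 16.1
print(round(vertical_fov_degrees(135), 1))  # 10.2
```

The same function can be used to find a matching Field of View value for other focal lengths.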

If you enable shadows in the VSeeFace light settings, set the shadow type on the directional light to soft.

To see the model with better light and shadow quality, use the Game view. You can align the camera with the current scene view by pressing Ctrl+Shift+F or using Game Object -> Align with view from the menu.

Translations

It is possible to translate VSeeFace into different languages and I am happy to add contributed translations! To add a new language, first make a new entry in VSeeFace_Data\StreamingAssets\Strings\Languages.json with a new language code and the name of the language in that language. The language code should usually be given in two lowercase letters, but can be longer in special cases. For a partial reference of language codes, you can refer to this list. Afterwards, make a copy of VSeeFace_Data\StreamingAssets\Strings\en.json and rename it to match the language code of the new language. Now you can edit this new file and translate the "text" parts of each entry into your language. The "comment" might help you find where the text is used, so you can more easily understand the context, but it otherwise doesn’t matter.

New languages should automatically appear in the language selection menu in VSeeFace, so you can check how your translation looks inside the program. Note that a JSON syntax error might lead to your whole file not loading correctly. In this case, you may be able to find the position of the error by looking into the Player.log, which can be found by using the button all the way at the bottom of the general settings.

Generally, your translation has to be enclosed by double quotes "like this". If double quotes occur in your text, put a \ in front, for example "like \"this\"". Line breaks can be written as \n.
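If you would rather catch quoting mistakes before starting VSeeFace, Python’s standard `json` module can do the escaping and the syntax check. This is a minimal sketch with hypothetical helper names. It only checks that the text is valid JSON and makes no assumption about the layout of the translation file beyond that.

```python
import json


def quote_for_json(text: str) -> str:
    """Return text as a JSON string literal, escaping quotes and line breaks."""
    return json.dumps(text, ensure_ascii=False)


def find_json_error(document: str):
    """Return None if document parses as JSON, else (line, column, message)."""
    try:
        json.loads(document)
    except json.JSONDecodeError as error:
        return (error.lineno, error.colno, error.msg)
    return None


print(quote_for_json('like "this"'))  # "like \"this\""
print(find_json_error('{"text": "like "this""}'))
```

To check a whole translation file, read its contents into a string and pass that string to `find_json_error`.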

Translations are coordinated on GitHub in the VSeeFaceTranslations repository, but you can also send me contributions over Twitter or Discord DM.

Running on Linux and maybe Mac

On v1.13.37c and later, it is necessary to delete GPUManagementPlugin.dll to be able to run VSeeFace with wine. This should be fixed on the latest versions.

Since OpenGL got deprecated on MacOS, it currently doesn’t seem to be possible to properly run VSeeFace even with wine. Models end up not being rendered.

Some people have gotten VSeeFace to run on Linux through wine and it might be possible on Mac as well, but nobody tried, to my knowledge. However, reading webcams is not possible through wine versions before 6. Starting with wine 6, you can try just using it normally.

For previous versions or if webcam reading does not work properly, as a workaround, you can set the camera in VSeeFace to [OpenSeeFace tracking] and run the facetracker.py script from OpenSeeFace manually. To do this, you will need a Python 3.7 or newer installation. To set up everything for facetracker.py, you can try something like this on Debian based distributions:

sudo apt-get install python3 python3-pip python3-virtualenv git

git clone https://github.com/emilianavt/OpenSeeFace

cd OpenSeeFace

virtualenv -p python3 env

source env/bin/activate

pip3 install onnxruntime opencv-python pillow numpy

To run the tracker, first enter the OpenSeeFace directory and activate the virtual environment for the current session:

source env/bin/activate

Then you can run the tracker:

python facetracker.py -c 0 -W 1280 -H 720 --discard-after 0 --scan-every 0 --no-3d-adapt 1 --max-feature-updates 900

Running this command will send the tracking data to a UDP port on localhost, on which VSeeFace will listen to receive the tracking data. The -c argument specifies which camera should be used, with the first being 0, while -W and -H let you specify the resolution. To see the webcam image with tracking points overlaid on your face, you can add the arguments -v 3 -P 1 somewhere.
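If you start the tracker often, the command line above can be assembled by a small helper script. This is only a sketch: it uses just the arguments shown above, and the function names are hypothetical. It assumes it is run with the Python interpreter of the activated virtual environment and pointed at your OpenSeeFace directory.

```python
import subprocess
import sys


def tracker_command(camera: int = 0, width: int = 1280, height: int = 720,
                    show_preview: bool = False) -> list:
    """Assemble the facetracker.py command line as an argument list."""
    command = [
        sys.executable, "facetracker.py",
        "-c", str(camera), "-W", str(width), "-H", str(height),
        "--discard-after", "0", "--scan-every", "0",
        "--no-3d-adapt", "1", "--max-feature-updates", "900",
    ]
    if show_preview:
        # Show the webcam image with tracking points overlaid.
        command += ["-v", "3", "-P", "1"]
    return command


def run_tracker(openseeface_dir: str, **options) -> int:
    """Run the tracker from the OpenSeeFace directory until it exits."""
    return subprocess.call(tracker_command(**options), cwd=openseeface_dir)
```

For example, `run_tracker("OpenSeeFace", camera=1, show_preview=True)` would use the second camera and open the preview window, assuming the repository was cloned into a folder named OpenSeeFace next to the script.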