How a recommender model makes recommendations depends on the type of data you have. If you only have data about which interactions have occurred in the past, you'll probably be interested in collaborative filtering. If you have data describing the user and the items they have interacted with (e.g. a user's age, the category of a restaurant's cuisine, the average review for a movie), you can model the likelihood of a new interaction given these properties at the current moment by adding content and context filtering.

Matrix Factorization for Recommendation

Matrix factorization (MF) techniques are the core of many popular algorithms, including word embedding and topic modeling, and have become a dominant methodology within collaborative-filtering-based recommendation. MF can be used to calculate the similarity in users' ratings or interactions to provide recommendations. Consider a simple user-item matrix in which Ted and Carol like movies B and C, and Bob likes movie B. To recommend a movie to Bob, matrix factorization calculates that users who liked B also liked C, so C is a possible recommendation for Bob.

Matrix factorization using the alternating least squares (ALS) algorithm approximates the sparse u-by-i user-item rating matrix as the product of two dense matrices: the user and item factor matrices, of size u × f and f × i (where u is the number of users, i the number of items and f the number of latent features). The factor matrices represent latent or hidden features that the algorithm tries to discover. One matrix tries to describe the latent features of each user, and the other tries to describe the latent properties of each item. For each user and each item, the ALS algorithm iteratively learns f numeric "factors" that represent that user or item. In each iteration, the algorithm alternately fixes one factor matrix and optimizes the other, and this process continues until it converges.
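The alternating updates can be sketched in a few lines of NumPy. This is a minimal illustration, not CuMF or any production implementation: the function name `als`, the ridge penalty `reg`, the toy rating matrix and the convention that 0 means "unrated" are all assumptions made for the example.

```python
import numpy as np

def als(R, mask, f=2, reg=0.1, iters=50, seed=0):
    """Approximate R (u x i) by U (u x f) @ V (f x i), fitting observed entries only."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    U = rng.normal(scale=0.1, size=(n_users, f))
    V = rng.normal(scale=0.1, size=(f, n_items))
    ridge = reg * np.eye(f)  # assumed L2 penalty; keeps each solve well-posed
    for _ in range(iters):
        # Fix V: each user's factors are a small regularized least-squares solve.
        for a in range(n_users):
            obs = mask[a]                  # items this user has rated
            Vo = V[:, obs]                 # f x n_obs
            U[a] = np.linalg.solve(Vo @ Vo.T + ridge, Vo @ R[a, obs])
        # Fix U: same idea, one solve per item.
        for b in range(n_items):
            obs = mask[:, b]               # users who rated this item
            Uo = U[obs]                    # n_obs x f
            V[:, b] = np.linalg.solve(Uo.T @ Uo + ridge, Uo.T @ R[obs, b])
    return U, V

# Toy 4-user x 4-item rating matrix; 0 marks a missing rating (an assumption).
R = np.array([[5, 4, 0, 1],
              [4, 0, 5, 1],
              [1, 1, 0, 5],
              [0, 1, 2, 4]], dtype=float)
mask = R > 0
U, V = als(R, mask)
pred = U @ V
rmse = np.sqrt(np.mean((pred[mask] - R[mask]) ** 2))
print(np.round(pred, 2))   # the former zeros now hold predicted ratings
```

The entries of `pred` at the previously missing positions are the model's rating estimates, which is what a recommender would rank.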

CuMF is an NVIDIA® CUDA®-based matrix factorization library that optimizes the alternating least squares (ALS) method to solve very large-scale MF problems. CuMF uses a set of techniques to maximize performance on single and multiple GPUs. These techniques include smart access of sparse data that leverages the GPU memory hierarchy, data parallelism used in conjunction with model parallelism to minimize the communication overhead among GPUs, and a novel topology-aware parallel reduction scheme.

Deep Neural Network Models for Recommendation

There are different variations of artificial neural networks (ANNs), such as the following:

- ANNs where information is only fed forward from one layer to the next are called feedforward neural networks. Multilayer perceptrons (MLPs) are a type of feedforward ANN consisting of at least three layers of nodes: an input layer, a hidden layer and an output layer. MLPs are flexible networks that can be applied to a variety of scenarios.

- Convolutional neural networks are the image crunchers used to identify objects.

- Recurrent neural networks are the mathematical engines used to parse language patterns and sequenced data.
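As a rough illustration of the feedforward case, the sketch below pushes a small batch through a three-layer MLP (input, one hidden layer, output). The layer sizes, the ReLU activation and the random, untrained weights are assumptions chosen only to keep the example short.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def mlp_forward(x, params):
    """Feed x forward through each (W, b) layer; ReLU on hidden layers only."""
    h = x
    for W, b in params[:-1]:
        h = relu(h @ W + b)
    W, b = params[-1]
    return h @ W + b          # raw output scores, no activation

rng = np.random.default_rng(0)
sizes = [4, 8, 3]             # input, hidden, output widths (illustrative)
params = [(rng.normal(scale=0.5, size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

x = rng.normal(size=(2, 4))   # batch of two input vectors
out = mlp_forward(x, params)
print(out.shape)
```

Information only moves from one layer to the next here; there are no loops or shared state between inputs, which is what distinguishes this from a recurrent network.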

Deep learning (DL) recommender models build upon existing techniques such as factorization to model the interactions between variables, and embeddings to handle categorical variables. An embedding is a learned vector of numbers representing entity features so that similar entities (users or items) have similar distances in the vector space. For example, a deep learning approach to collaborative filtering learns the user and item embeddings (latent feature vectors) based on user and item interactions with a neural network.
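To make "similar entities end up close together" concrete, the following sketch compares a few item embeddings with cosine similarity. The vectors are hand-picked for illustration rather than learned, and the item names are hypothetical.

```python
import numpy as np

# Hand-picked 3-dimensional vectors standing in for learned item embeddings.
item_embeddings = {
    "action_movie_1": np.array([0.9, 0.1, 0.0]),
    "action_movie_2": np.array([0.8, 0.2, 0.1]),
    "romance_movie":  np.array([0.1, 0.9, 0.2]),
}

def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

sim_same = cosine(item_embeddings["action_movie_1"], item_embeddings["action_movie_2"])
sim_diff = cosine(item_embeddings["action_movie_1"], item_embeddings["romance_movie"])
print(f"action vs action:  {sim_same:.3f}")
print(f"action vs romance: {sim_diff:.3f}")
```

In a trained model these vectors would be parameters updated by gradient descent, so the geometry reflects observed interactions rather than manual choices.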

DL techniques also tap into the vast and rapidly growing body of novel network architectures and optimization algorithms to train on large amounts of data, use the power of deep learning for feature extraction, and build more expressive models.

Current DL-based models for recommender systems, namely DLRM, Wide and Deep (W&D), Neural Collaborative Filtering (NCF), Variational AutoEncoder (VAE) and BERT (for NLP), form part of the NVIDIA GPU-accelerated DL model portfolio, which covers a wide range of network architectures and applications in many different domains beyond recommender systems, including image, text and speech analysis. These models are designed and optimized for training with TensorFlow or PyTorch.

Neural Collaborative Filtering

The Neural Collaborative Filtering (NCF) model is a neural network that provides collaborative filtering based on user and item interactions. The model treats matrix factorization from a non-linearity perspective. NCF TensorFlow takes in a sequence of (user ID, item ID) pairs as inputs, then feeds them separately into a matrix factorization step (where the embeddings are multiplied) and into a multilayer perceptron (MLP) network.

The outputs of the matrix factorization and the MLP network are then combined and fed into a single dense layer that predicts whether the input user is likely to interact with the input item.
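The two-branch structure described above can be sketched as a forward pass in NumPy. This is not the NVIDIA NCF TensorFlow code: the embedding size, the two hidden layer widths, the sigmoid output and the random, untrained weights are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_users, n_items, d = 5, 7, 4

# Separate embedding tables for the MF branch and the MLP branch (untrained).
mf_user, mf_item = rng.normal(size=(n_users, d)), rng.normal(size=(n_items, d))
mlp_user, mlp_item = rng.normal(size=(n_users, d)), rng.normal(size=(n_items, d))
W1, b1 = rng.normal(scale=0.3, size=(2 * d, 8)), np.zeros(8)
W2, b2 = rng.normal(scale=0.3, size=(8, 4)), np.zeros(4)
w_out, b_out = rng.normal(scale=0.3, size=d + 4), 0.0

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def ncf_forward(user_ids, item_ids):
    # Matrix factorization branch: element-wise product of the embeddings.
    mf = mf_user[user_ids] * mf_item[item_ids]
    # MLP branch: concatenated embeddings through two hidden layers.
    h = np.concatenate([mlp_user[user_ids], mlp_item[item_ids]], axis=1)
    h = np.maximum(h @ W1 + b1, 0.0)
    h = np.maximum(h @ W2 + b2, 0.0)
    # Combine both branches and apply a single dense output layer.
    z = np.concatenate([mf, h], axis=1) @ w_out + b_out
    return sigmoid(z)

users = np.array([0, 1, 4])
items = np.array([2, 2, 6])
probs = ncf_forward(users, items)
print(np.round(probs, 3))   # one interaction score per (user, item) pair
```

Keeping separate embedding tables per branch lets each branch learn a representation suited to its own interaction function, at the cost of more parameters.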

Variational Autoencoder for Collaborative Filtering

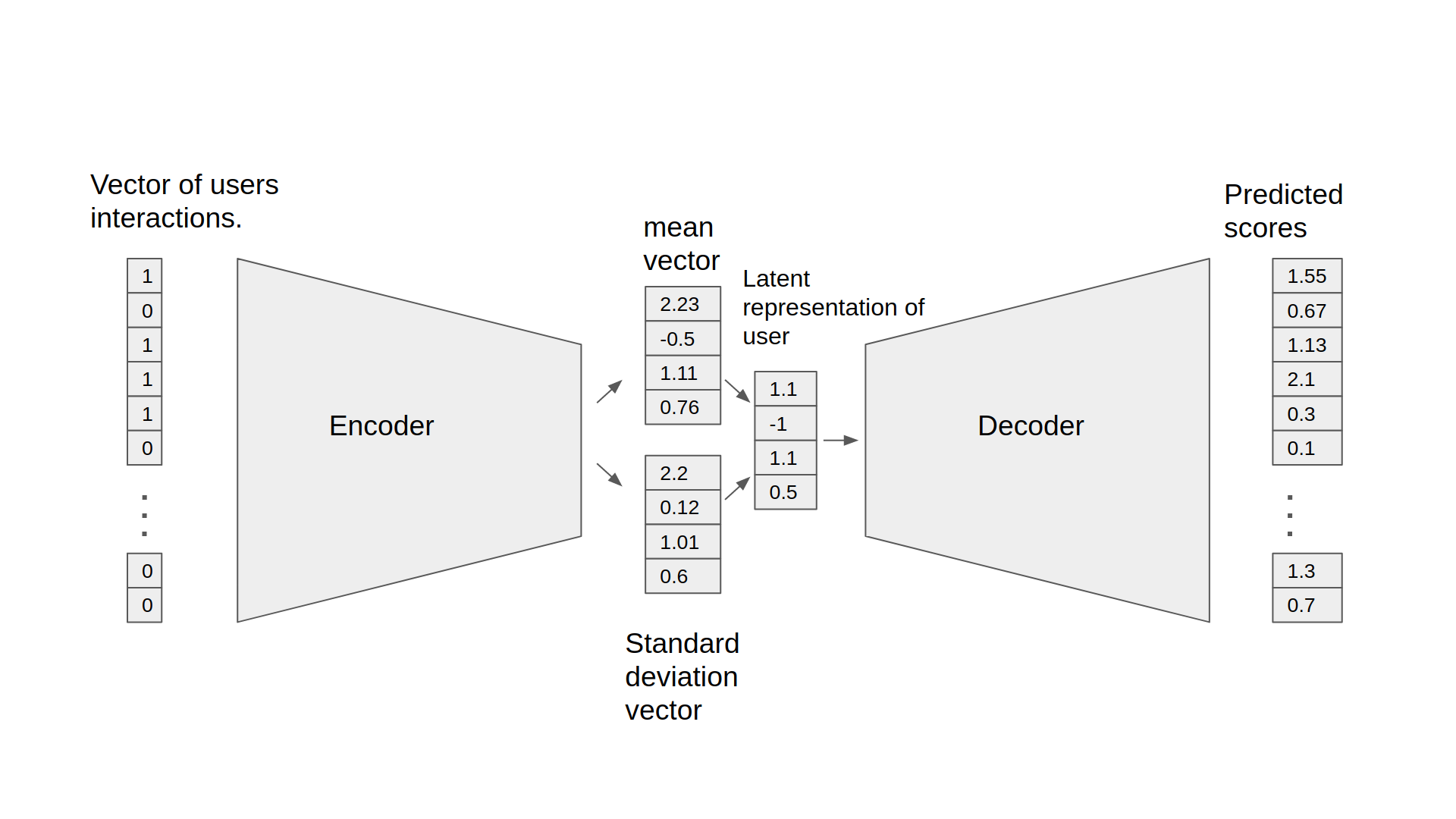

An autoencoder neural network reconstructs the input layer at the output layer by using the representation obtained in the hidden layer. An autoencoder for collaborative filtering learns a non-linear representation of a user-item matrix and reconstructs it by determining missing values.

The NVIDIA GPU-accelerated Variational Autoencoder for Collaborative Filtering (VAE-CF) is an optimized implementation of the architecture first described in Variational Autoencoders for Collaborative Filtering. VAE-CF is a neural network that provides collaborative filtering based on user and item interactions. The training data for this model consists of pairs of user-item IDs for each interaction between a user and an item.

The model consists of two parts: the encoder and the decoder. The encoder is a feedforward, fully connected neural network that transforms the input vector, containing the interactions for a specific user, into an n-dimensional variational distribution. This variational distribution is used to obtain a latent feature representation of a user (or embedding). This latent representation is then fed into the decoder, which is also a feedforward network with a structure similar to the encoder. The result is a vector of item interaction probabilities for a particular user.
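A minimal sketch of this encoder/decoder flow follows. It is not the NVIDIA VAE-CF implementation: the layer sizes, the tanh activations, the diagonal Gaussian parameterized by a mean and log-variance, and the softmax over items at the output are assumptions, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)
n_items, hidden, latent = 6, 8, 3

# Randomly initialized weights stand in for trained parameters.
W_enc = rng.normal(scale=0.3, size=(n_items, hidden))
W_mu = rng.normal(scale=0.3, size=(hidden, latent))
W_logvar = rng.normal(scale=0.3, size=(hidden, latent))
W_dec1 = rng.normal(scale=0.3, size=(latent, hidden))
W_dec2 = rng.normal(scale=0.3, size=(hidden, n_items))

def encode(x):
    """Map a user's interaction vector to the mean and log-variance of q(z | x)."""
    h = np.tanh(x @ W_enc)
    return h @ W_mu, h @ W_logvar

def sample(mu, logvar):
    """Reparameterization: z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps

def decode(z):
    """Map the latent user representation to a probability for each item."""
    h = np.tanh(z @ W_dec1)
    logits = h @ W_dec2
    e = np.exp(logits - logits.max())   # numerically stable softmax
    return e / e.sum()

x = np.array([1.0, 0.0, 1.0, 0.0, 0.0, 1.0])   # items 0, 2 and 5 were interacted with
mu, logvar = encode(x)
z = sample(mu, logvar)
item_probs = decode(z)
print(np.round(item_probs, 3))
```

At recommendation time one would typically rank the items the user has not yet interacted with by these probabilities; using `mu` directly instead of a sample is a common way to make that ranking deterministic.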

Contextual Sequence Learning

A recurrent neural network (RNN) is a class of neural network that has memory or feedback loops that allow it to better recognize patterns in data. RNNs solve difficult tasks that deal with context and sequences, such as natural language processing, and are also used for contextual sequence recommendations. What distinguishes sequence learning from other tasks is the need to use models with an active data memory, such as LSTMs (Long Short-Term Memory) or GRUs (Gated Recurrent Units), to learn temporal dependence in input data. This memory of past input is crucial for successful sequence learning. Transformer deep learning models, such as BERT (Bidirectional Encoder Representations from Transformers), are an alternative to RNNs that apply an attention technique: parsing a sentence by focusing attention on the most relevant words that come before or after it. Transformer-based deep learning models don't require sequential data to be processed in order, allowing for much more parallelization and reduced training time on GPUs than RNNs.
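The attention idea can be illustrated with a single-head scaled dot-product self-attention step, the basic building block of Transformer models. The sketch below is a simplification: the dimensions and random projection matrices are assumptions, and real models add multiple heads, positional information and further layers.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 6, 3

X = rng.normal(size=(seq_len, d_model))   # one embedded token per row
Wq, Wk, Wv = (rng.normal(scale=0.5, size=(d_model, d_k)) for _ in range(3))

def self_attention(X):
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    # Every position scores every other position in one matrix product,
    # so no step has to wait for a previous time step to finish.
    scores = Q @ K.T / np.sqrt(d_k)
    scores = scores - scores.max(axis=1, keepdims=True)   # stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ V, weights

out, weights = self_attention(X)
print(np.round(weights, 2))   # row t: how much position t attends to each position
```

Because the whole sequence is handled with matrix products rather than a step-by-step loop, this computation maps naturally onto GPUs.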

In an NLP application, input text is converted into word vectors using techniques such as word embedding. With word embedding, each word in the sentence is translated into a set of numbers before being fed into RNN variants, Transformer, or BERT to understand context. These numbers change over time while the neural net trains itself, encoding unique properties such as the semantics and contextual information of each word, so that similar words are close to each other in this number space and dissimilar words are far apart. These DL models provide an appropriate output for a specific language task like next-word prediction and text summarization, which are used to produce an output sequence.

Session context-based recommendations apply the advances in sequence modeling from deep learning and NLP to recommendations. RNN models trained on the sequence of user events in a session (e.g. products viewed, date and time of interactions) learn to predict the next item(s) in a session. User-item interactions in a session are embedded similarly to words in a sentence. For example, movies viewed are translated into a set of numbers before being fed into a sequence model such as an LSTM, GRU, or Transformer to understand context.
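As a sketch of session-based next-item prediction, the code below embeds the items of a session, runs them through a single GRU cell, and scores every catalog item from the final hidden state. The catalog size, dimensions, bias-free GRU formulation and random, untrained weights are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_items, d, h_dim = 8, 4, 5

item_emb = rng.normal(scale=0.5, size=(n_items, d))   # one vector per item ID
Wz, Wr, Wh = (rng.normal(scale=0.5, size=(d, h_dim)) for _ in range(3))
Uz, Ur, Uh = (rng.normal(scale=0.5, size=(h_dim, h_dim)) for _ in range(3))
W_out = rng.normal(scale=0.5, size=(h_dim, n_items))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h):
    z = sigmoid(x @ Wz + h @ Uz)               # update gate
    r = sigmoid(x @ Wr + h @ Ur)               # reset gate
    h_cand = np.tanh(x @ Wh + (r * h) @ Uh)    # candidate state
    return (1.0 - z) * h + z * h_cand          # blend old memory with new input

def next_item_probs(session):
    h = np.zeros(h_dim)
    for item_id in session:                    # events are consumed in order
        h = gru_step(item_emb[item_id], h)
    logits = h @ W_out
    e = np.exp(logits - logits.max())
    return e / e.sum()                         # distribution over the next item

session = [3, 1, 5]                            # item IDs viewed in this session
probs = next_item_probs(session)
print(int(np.argmax(probs)))                   # index of the top-scoring item
```

The hidden state `h` is the "memory" of the session so far, so reordering the same items generally changes the prediction.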

Wide & Deep

Wide & Deep refers to a class of networks that use the output of two parts working in parallel, a wide model and a deep model, whose outputs are summed to create an interaction probability. The wide model is a generalized linear model of features together with their transforms. The deep model is a Dense Neural Network (DNN), a series of five hidden MLP layers of 1024 neurons, each beginning with a dense embedding of features. Categorical variables are embedded into continuous vector spaces before being fed to the DNN via learned or user-determined embeddings.
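The way the two parts are summed can be sketched as follows. To keep the example small, the deep part here is a single 16-unit hidden layer rather than five layers of 1024 neurons; the feature counts, the sigmoid applied to the summed logits and the random, untrained weights are likewise assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_wide, n_categories, emb_dim, n_num, hidden = 6, 10, 4, 2, 16

w_wide = rng.normal(scale=0.1, size=n_wide)                # linear weights
emb = rng.normal(scale=0.1, size=(n_categories, emb_dim))  # categorical embedding
W1 = rng.normal(scale=0.3, size=(emb_dim + n_num, hidden))
b1 = np.zeros(hidden)
w2 = rng.normal(scale=0.3, size=hidden)
bias = 0.0

def wide_and_deep(x_wide, cat_id, x_num):
    # Wide part: generalized linear model over raw and crossed features.
    wide_logit = x_wide @ w_wide
    # Deep part: embed the categorical feature, append numeric ones, run the MLP.
    deep_in = np.concatenate([emb[cat_id], x_num])
    h = np.maximum(deep_in @ W1 + b1, 0.0)
    deep_logit = h @ w2
    # The two outputs are summed, then squashed to a probability.
    return 1.0 / (1.0 + np.exp(-(wide_logit + deep_logit + bias)))

x_wide = np.array([1.0, 0.0, 0.0, 1.0, 0.0, 1.0])   # one-hot and cross-product indicators
p = wide_and_deep(x_wide, cat_id=3, x_num=np.array([0.2, 1.5]))
print(round(float(p), 3))
```

Because both parts feed one output, they can be trained jointly against the same loss rather than as two separate models.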

What makes this model so successful for recommendation tasks is that it provides two avenues for learning patterns in the data, "deep" and "shallow". The complex, nonlinear DNN is capable of learning rich representations of relationships in the data and generalizing to similar items via embeddings, but needs to see many examples of these relationships in order to do so well. The linear piece, on the other hand, is capable of "memorizing" simple relationships that may only occur a handful of times in the training set.

In combination, these two representation channels often end up providing more modeling power than either on its own. NVIDIA has worked with many industry partners who reported improvements in offline and online metrics by using Wide & Deep as a replacement for more traditional machine learning models.

DLRM

DLRM is a DL-based model for recommendations introduced by Facebook research. It's designed to make use of both the categorical and numerical inputs that are usually present in recommender system training data. To handle categorical data, embedding layers map each category to a dense representation before it is fed into multilayer perceptrons (MLPs). Numerical features can be fed directly into an MLP.

At the next level, second-order interactions of different features are computed explicitly by taking the dot product between all pairs of embedding vectors and processed dense features. Those pairwise interactions are fed into a top-level MLP to compute the likelihood of interaction between a user and item pair.
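The explicit pairwise interaction step can be sketched as below. This is an illustrative forward pass, not the reference DLRM code: the table sizes, the single-layer bottom and top MLPs, the random weights, and the choice to concatenate the processed dense vector with the pairwise terms before the top MLP are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n_dense = 4, 3
table_sizes = [10, 20, 5]                      # hypothetical category counts
tables = [rng.normal(scale=0.1, size=(n, d)) for n in table_sizes]
W_bot = rng.normal(scale=0.3, size=(n_dense, d))

n_vecs = len(tables) + 1                       # embeddings + processed dense vector
n_pairs = n_vecs * (n_vecs - 1) // 2
W_top = rng.normal(scale=0.3, size=(d + n_pairs, 8))
w_out = rng.normal(scale=0.3, size=8)

def dlrm_forward(x_dense, cat_ids):
    # Bottom MLP (one layer here) maps numerical features to the embedding size.
    dense_vec = np.maximum(x_dense @ W_bot, 0.0)
    # Embedding lookup: one dense vector per categorical feature.
    emb_vecs = [table[i] for table, i in zip(tables, cat_ids)]
    T = np.vstack([dense_vec] + emb_vecs)      # shape (n_vecs, d)
    # Dot products between all distinct pairs: strict upper triangle of T @ T.T.
    Z = T @ T.T
    rows, cols = np.triu_indices(n_vecs, k=1)
    pairs = Z[rows, cols]
    # Top MLP turns the interactions into an interaction probability.
    top_in = np.concatenate([dense_vec, pairs])
    h = np.maximum(top_in @ W_top, 0.0)
    prob = 1.0 / (1.0 + np.exp(-(h @ w_out)))
    return prob, pairs

prob, pairs = dlrm_forward(np.array([0.5, 1.2, -0.3]), [3, 7, 1])
print(pairs.shape, round(float(prob), 3))
```

Each embedding vector enters the dot products as a whole, so four vectors yield only six interaction terms regardless of the embedding dimension.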

Compared to other DL-based approaches to recommendation, DLRM differs in two ways. First, it computes the feature interactions explicitly while limiting the order of interaction to pairwise interactions. Second, DLRM treats each embedded feature vector (corresponding to categorical features) as a single unit, whereas other methods (such as Deep and Cross) treat each element in the feature vector as a new unit that should yield different cross terms. These design choices help reduce computational/memory cost while maintaining competitive accuracy.

DLRM forms part of NVIDIA Merlin, a framework for building high-performance, DL-based recommender systems, which we discuss below.